Introduction

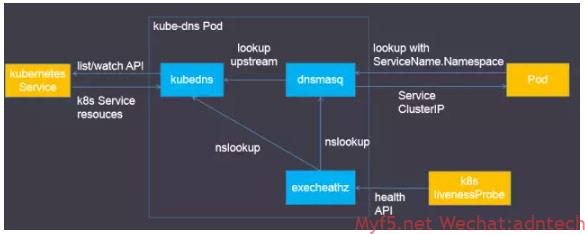

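Kubernetes 1.6.7 no longer uses the old kube2sky-based DNS setup. This test deploys kube-dns from the official YAML files. The figure below shows the components of kube-dns: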

(In the figure, exechealthz has since been replaced by sidecar.)

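kubedns takes over the role of the former skydns and keeps the information it reads from the Kubernetes API in a tree structure. dnsmasq is the entry point that answers DNS queries for the pod, and it acts as a DNS cache in front of kubedns to shield it from load.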
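To make the split between dnsmasq and kubedns concrete: the dnsmasq container's args in the Deployment manifest later in this article contain three rules of the form --server=/<domain>/127.0.0.1#10053, which send cluster names and reverse lookups to kubedns on port 10053. The sketch below is a simplified model of that suffix matching, assuming only those three rules and assuming that everything else goes to the node's own resolvers (the pod uses dnsPolicy: Default). dnsmasq_route is a hypothetical helper, not part of dnsmasq.

```sh
# Simplified model of how the dnsmasq container routes a query, based on the
# three --server=/<domain>/127.0.0.1#10053 rules in kubedns-controller.yaml.
# dnsmasq_route is a hypothetical helper for illustration only.
KUBEDNS=127.0.0.1#10053

dnsmasq_route() {
  # Normalize to a fully qualified name with a trailing dot.
  name=$1
  case $name in *.) ;; *) name=$name. ;; esac
  case $name in
    *.cluster.local.|cluster.local.) echo "$name -> kubedns ($KUBEDNS)" ;;
    *.in-addr.arpa.|*.ip6.arpa.)     echo "$name -> kubedns ($KUBEDNS)" ;;
    # Assumption: with dnsPolicy: Default, other names use the node's resolvers.
    *) echo "$name -> upstream resolvers from the node's /etc/resolv.conf" ;;
  esac
}

dnsmasq_route kubernetes.default.svc.cluster.local
dnsmasq_route 53.0.169.169.in-addr.arpa
dnsmasq_route www.example.com
```

Real dnsmasq also caches answers (--cache-size=1000 in this manifest) and picks the most specific matching rule; the model only shows where a cache miss is forwarded.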

sidecar periodically performs DNS lookups to check that both kubedns and dnsmasq are answering correctly:

```
- --probe=kubedns,127.0.0.1:10053,kubernetes.default.svc.cluster.local.,5,A
- --probe=dnsmasq,127.0.0.1:53,kubernetes.default.svc.cluster.local.,5,A
```
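Each --probe value packs several fields into one comma-separated string. As we read the sidecar's format, the fields are label, server (host:port), DNS name, interval in seconds and record type. The label appears to be what the /healthcheck/<label> paths are named after: the kubedns and dnsmasq liveness probes in the Deployment below query /healthcheck/kubedns and /healthcheck/dnsmasq on port 10054, the sidecar's port. parse_probe is a hypothetical helper that only splits the string.

```sh
# Split a sidecar --probe value into its fields.
# Assumed field order: label,host:port,dns-name,interval-seconds,record-type
parse_probe() {
  spec=${1#--probe=}            # drop the flag name if present
  IFS=, read -r label server name interval qtype <<EOF
$spec
EOF
  host=${server%:*}             # text before the last ':'
  port=${server##*:}            # text after the last ':'
  printf 'label=%s host=%s port=%s name=%s interval=%s type=%s\n' \
    "$label" "$host" "$port" "$name" "$interval" "$qtype"
}

parse_probe --probe=kubedns,127.0.0.1:10053,kubernetes.default.svc.cluster.local.,5,A
parse_probe --probe=dnsmasq,127.0.0.1:53,kubernetes.default.svc.cluster.local.,5,A
```

The two probes differ only in the target port: 10053 queries kubedns directly, while 53 goes through dnsmasq, so comparing the two health checks tells you which layer is failing.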

Preparing the YAML files

Go to the kubernetes/cluster/addons/dns/ directory of the 1.6.7 release tarball downloaded and extracted earlier:

```
[root@docker1 dns]# pwd
/root/kubernetes/cluster/addons/dns
[root@docker1 dns]# ll
total 64
-rw-r-----. 1 root root  731 Jul  6 01:05 kubedns-cm.yaml
-rw-r-----. 1 root root 5372 Jul  6 01:05 kubedns-controller.yaml.base
-rw-r-----. 1 root root 5459 Jul  6 01:05 kubedns-controller.yaml.in
-rw-r-----. 1 root root 5352 Jul  6 01:05 kubedns-controller.yaml.sed
-rw-r-----. 1 root root  187 Jul  6 01:05 kubedns-sa.yaml
-rw-r-----. 1 root root 1037 Jul  6 01:05 kubedns-svc.yaml.base
-rw-r-----. 1 root root 1106 Jul  6 01:05 kubedns-svc.yaml.in
-rw-r-----. 1 root root 1094 Jul  6 01:05 kubedns-svc.yaml.sed
-rw-r-----. 1 root root 1138 Jul  6 01:05 Makefile
-rw-r-----. 1 root root   56 Jul  6 01:05 OWNERS
-rw-r-----. 1 root root 2106 Jul  6 01:05 README.md
-rw-r-----. 1 root root  318 Jul  6 01:05 transforms2salt.sed
-rw-r-----. 1 root root  251 Jul  6 01:05 transforms2sed.sed
```

Copy the four files kubedns-cm.yaml, kubedns-sa.yaml, kubedns-controller.yaml.in and kubedns-svc.yaml.in to /root/kubedns, renaming the two .in files to kubedns-controller.yaml and kubedns-svc.yaml:

```
[root@docker1 dns]# cd /root/kubedns
[root@docker1 kubedns]# ll
total 20
-rw-r-----. 1 root root  731 Jul  9 22:50 kubedns-cm.yaml
-rw-r-----. 1 root root 5411 Jul  9 23:05 kubedns-controller.yaml
-rw-r-----. 1 root root  187 Jul  9 22:50 kubedns-sa.yaml
-rw-r-----. 1 root root 1092 Jul  9 23:06 kubedns-svc.yaml
```
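The copy-and-rename step can be wrapped in a small function so it is repeatable. This is a sketch; stage_kubedns_files is a hypothetical name, and the example paths are the ones used in this article.

```sh
# Hypothetical helper reproducing the copy-and-rename step.
# Usage: stage_kubedns_files <addons/dns source dir> <target dir>
stage_kubedns_files() {
  src=$1 dst=$2
  mkdir -p "$dst" || return 1
  cp "$src/kubedns-cm.yaml" "$src/kubedns-sa.yaml" "$dst/" || return 1
  # The Salt-templated .in files lose their suffix when copied.
  cp "$src/kubedns-controller.yaml.in" "$dst/kubedns-controller.yaml" || return 1
  cp "$src/kubedns-svc.yaml.in" "$dst/kubedns-svc.yaml"
}

# Example with the paths from this article:
# stage_kubedns_files /root/kubernetes/cluster/addons/dns /root/kubedns
```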

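Editing the configuration files

Edit kubedns-controller.yaml, the Deployment manifest. The main changes are replacing pillar['dns_domain'] with cluster.local, commenting out the {{ pillar['federations_domain_map'] }} placeholder, and telling kubedns how to reach the master (--kube-master-url). Compare with the already-edited file below: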

```
[root@docker1 kubedns]# cat kubedns-controller.yaml
# Copyright 2016 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# Should keep target in cluster/addons/dns-horizontal-autoscaler/dns-horizontal-autoscaler.yaml
# in sync with this file.

# Warning: This is a file generated from the base underscore template file: kubedns-controller.yaml.base

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: kube-dns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  # replicas: not specified here:
  # 1. In order to make Addon Manager do not reconcile this replicas parameter.
  # 2. Default is 1.
  # 3. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
  strategy:
    rollingUpdate:
      maxSurge: 10%
      maxUnavailable: 0
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"
      volumes:
      - name: kube-dns-config
        configMap:
          name: kube-dns
          optional: true
      containers:
      - name: kubedns
        image: gcr.io/google_containers/k8s-dns-kube-dns-amd64:1.14.4
        resources:
          # TODO: Set memory limits when we've profiled the container for large
          # clusters, then set request = limit to keep this container in
          # guaranteed class. Currently, this container falls into the
          # "burstable" category so the kubelet doesn't backoff from restarting it.
          limits:
            memory: 170Mi
          requests:
            cpu: 100m
            memory: 70Mi
        livenessProbe:
          httpGet:
            path: /healthcheck/kubedns
            port: 10054
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /readiness
            port: 8081
            scheme: HTTP
          # we poll on pod startup for the Kubernetes master service and
          # only setup the /readiness HTTP server once that's available.
          initialDelaySeconds: 3
          timeoutSeconds: 5
        args:
        - --domain=cluster.local.
        - --dns-port=10053
        - --config-dir=/kube-dns-config
        - --v=2
        #{{ pillar['federations_domain_map'] }}
        - --kube-master-url=http://172.16.199.17:8080
        env:
        - name: PROMETHEUS_PORT
          value: "10055"
        ports:
        - containerPort: 10053
          name: dns-local
          protocol: UDP
        - containerPort: 10053
          name: dns-tcp-local
          protocol: TCP
        - containerPort: 10055
          name: metrics
          protocol: TCP
        volumeMounts:
        - name: kube-dns-config
          mountPath: /kube-dns-config
      - name: dnsmasq
        image: gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64:1.14.4
        livenessProbe:
          httpGet:
            path: /healthcheck/dnsmasq
            port: 10054
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        args:
        - -v=2
        - -logtostderr
        - -configDir=/etc/k8s/dns/dnsmasq-nanny
        - -restartDnsmasq=true
        - --
        - -k
        - --cache-size=1000
        - --log-facility=-
        - --server=/cluster.local./127.0.0.1#10053
        - --server=/in-addr.arpa/127.0.0.1#10053
        - --server=/ip6.arpa/127.0.0.1#10053
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        # see: https://github.com/kubernetes/kubernetes/issues/29055 for details
        resources:
          requests:
            cpu: 150m
            memory: 20Mi
        volumeMounts:
        - name: kube-dns-config
          mountPath: /etc/k8s/dns/dnsmasq-nanny
      - name: sidecar
        image: gcr.io/google_containers/k8s-dns-sidecar-amd64:1.14.4
        livenessProbe:
          httpGet:
            path: /metrics
            port: 10054
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        args:
        - --v=2
        - --logtostderr
        - --probe=kubedns,127.0.0.1:10053,kubernetes.default.svc.cluster.local.,5,A
        - --probe=dnsmasq,127.0.0.1:53,kubernetes.default.svc.cluster.local.,5,A
        ports:
        - containerPort: 10054
          name: metrics
          protocol: TCP
        resources:
          requests:
            memory: 20Mi
            cpu: 10m
      dnsPolicy: Default  # Don't use cluster DNS.
      serviceAccountName: kube-dns
```
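The placeholder substitutions can also be scripted instead of done by hand. A minimal sketch with sed, assuming the .in templates contain the literal Salt placeholders {{ pillar['dns_domain'] }} and {{ pillar['federations_domain_map'] }} (as the edited file above suggests) and, for the Service, {{ pillar['dns_server'] }} in the clusterIP field (an assumption). The function names are hypothetical, and --kube-master-url still has to be added by hand.

```sh
# Hypothetical helper: render a Salt-templated kube-dns manifest (stdin -> stdout).
# Values are the ones used in this article.
DNS_DOMAIN=cluster.local
DNS_SERVER_IP=169.169.0.53

render_kubedns() {
  sed -e "s/{{ pillar\['dns_domain'] }}/$DNS_DOMAIN/g" \
      -e "s/{{ pillar\['dns_server'] }}/$DNS_SERVER_IP/g" \
      -e "s/{{ pillar\['federations_domain_map'] }}/#&/"
}

# Fail (and print the offending lines) if any Salt placeholder survived.
check_rendered() {
  if grep -n "{{ pillar" "$1"; then
    echo "unrendered placeholders left in $1" >&2
    return 1
  fi
}

# Example, run inside /root/kubedns before adding --kube-master-url:
# render_kubedns < kubedns-controller.yaml > kubedns-controller.yaml.tmp &&
#   mv kubedns-controller.yaml.tmp kubedns-controller.yaml
# check_rendered kubedns-controller.yaml
```

Commenting out the federations placeholder (rather than deleting it) mirrors what the edited file shows and leaves a visible marker of the original template.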

Edit the Service YAML file. The main change is setting clusterIP to the DNS IP that the kubelet was started with (its --cluster-dns flag):

```
[root@docker1 kubedns]# cat kubedns-svc.yaml
# Copyright 2016 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# Warning: This is a file generated from the base underscore template file: kubedns-svc.yaml.base

apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "KubeDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 169.169.0.53
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
```
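A mismatch between the Service clusterIP and the kubelet's --cluster-dns is easy to miss, because pods still start but their /etc/resolv.conf points at an address nobody serves. A sketch of a check, assuming the kubelet is started with the --flag=value form and that `ps -o args= -C kubelet` (Linux procps) shows its command line; the helper names are hypothetical.

```sh
# Print VALUE from "--<flag>=VALUE" in a command-line string.
flag_value() {  # $1 = flag name without dashes, $2 = command line
  for arg in $2; do
    case $arg in
      --"$1"=*) printf '%s\n' "${arg#--$1=}"; return 0 ;;
    esac
  done
  return 1
}

# Print the clusterIP field of a Service manifest.
svc_cluster_ip() {
  awk '$1 == "clusterIP:" { print $2; exit }' "$1"
}

check_cluster_dns() {  # $1 = kubelet command line, $2 = Service manifest
  want=$(flag_value cluster-dns "$1") || { echo "kubelet has no --cluster-dns" >&2; return 1; }
  have=$(svc_cluster_ip "$2")
  if [ "$want" = "$have" ]; then
    echo "OK: clusterIP $have matches kubelet --cluster-dns"
  else
    echo "MISMATCH: clusterIP=$have, kubelet --cluster-dns=$want" >&2
    return 1
  fi
}

# On each node:
# check_cluster_dns "$(ps -o args= -C kubelet)" /root/kubedns/kubedns-svc.yaml
```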

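Pulling the Docker images

The images referenced in the manifests above are pulled from Google's registry (gcr.io), which is generally unreachable from mainland China, so they have to be fetched from another registry first. I have pushed the required images (the three DNS images plus the pause image) to Docker Hub; pull them and re-tag them with the gcr.io names. Note that these commands must be run on every node: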

```
docker pull myf5/k8s-dns-kube-dns-amd64:1.14.4
docker pull myf5/k8s-dns-dnsmasq-nanny-amd64:1.14.4
docker pull myf5/k8s-dns-sidecar-amd64:1.14.4
docker pull myf5/pause-amd64:3.0
```

```
docker tag myf5/k8s-dns-kube-dns-amd64:1.14.4 gcr.io/google_containers/k8s-dns-kube-dns-amd64:1.14.4
docker tag myf5/k8s-dns-dnsmasq-nanny-amd64:1.14.4 gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64:1.14.4
docker tag myf5/k8s-dns-sidecar-amd64:1.14.4 gcr.io/google_containers/k8s-dns-sidecar-amd64:1.14.4
docker tag myf5/pause-amd64:3.0 gcr.io/google_containers/pause-amd64:3.0
```
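The eight pull/tag commands follow one pattern, so they can be driven by a single image list. A sketch, assuming the myf5 mirror and versions used in this article; mirror_images and run are hypothetical helpers, and DRY_RUN=1 prints the commands instead of executing them.

```sh
# Pull each image from the Docker Hub mirror and re-tag it with the gcr.io
# name that the manifests (and the kubelet's sandbox) expect.
MIRROR=myf5
TARGET=gcr.io/google_containers
IMAGES="k8s-dns-kube-dns-amd64:1.14.4 k8s-dns-dnsmasq-nanny-amd64:1.14.4 k8s-dns-sidecar-amd64:1.14.4 pause-amd64:3.0"

run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

mirror_images() {
  for img in $IMAGES; do
    run docker pull "$MIRROR/$img" || return 1
    run docker tag "$MIRROR/$img" "$TARGET/$img" || return 1
  done
}

# On every node: mirror_images
# Preview only:  DRY_RUN=1 mirror_images
```

Keeping the list in one variable makes it harder to forget an image, which is exactly the pause-image mistake described in the troubleshooting appendix.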

Creating the resources from the YAML files

```
cd /root/kubedns
kubectl create -f .
```
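Rather than re-running kubectl get by hand, you can poll until the kube-dns pod reports all containers ready. A sketch: pods_ready and wait_for_kube_dns are hypothetical helpers that parse `kubectl get pod --no-headers` output, assuming column 2 is READY (ready/total) and column 3 is STATUS, as in the listings in this article.

```sh
# Succeed only if every input line shows READY n/n and STATUS Running,
# and there is at least one line.
pods_ready() {
  awk '{ split($2, r, "/"); if (r[1] != r[2] || $3 != "Running") bad = 1; n++ }
       END { exit (n == 0 || bad) }'
}

# Poll up to $1 times (default 30), 5 seconds apart.
wait_for_kube_dns() {
  tries=${1:-30}
  i=0
  until kubectl get pod -n kube-system -l k8s-app=kube-dns --no-headers | pods_ready; do
    i=$((i + 1))
    if [ "$i" -ge "$tries" ]; then
      echo "kube-dns not ready after $tries checks" >&2
      return 1
    fi
    sleep 5
  done
  echo "kube-dns is ready"
}

# wait_for_kube_dns 30
```

A pod stuck at 0/3 ContainerCreating (as in the appendix) makes this time out, at which point kubectl get events -n kube-system is the next thing to look at.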

Verification

```
[root@docker1 kubedns]# kubectl get pod -n kube-system -o wide
NAME                        READY     STATUS    RESTARTS   AGE       IP          NODE
kube-dns-1099564669-hv1pj   3/3       Running   0          1m        10.2.39.2   172.16.199.37
```

As the output shows, the pod is running on node 172.16.199.37 and received the IP address 10.2.39.2 from the flannel network.

```
[root@docker1 kubedns]# kubectl get all -n kube-system -o wide
NAME                           READY     STATUS    RESTARTS   AGE       IP          NODE
po/kube-dns-1099564669-hv1pj   3/3       Running   0          1m        10.2.39.2   172.16.199.37

NAME           CLUSTER-IP     EXTERNAL-IP   PORT(S)         AGE       SELECTOR
svc/kube-dns   169.169.0.53   <none>        53/UDP,53/TCP   2h        k8s-app=kube-dns

NAME              DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE       CONTAINER(S)              IMAGE(S)                                                                                                                                                                 SELECTOR
deploy/kube-dns   1         1         1            1           1m        kubedns,dnsmasq,sidecar   gcr.io/google_containers/k8s-dns-kube-dns-amd64:1.14.4,gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64:1.14.4,gcr.io/google_containers/k8s-dns-sidecar-amd64:1.14.4   k8s-app=kube-dns

NAME                     DESIRED   CURRENT   READY     AGE       CONTAINER(S)              IMAGE(S)                                                                                                                                                                 SELECTOR
rs/kube-dns-1099564669   1         1         1         1m        kubedns,dnsmasq,sidecar   gcr.io/google_containers/k8s-dns-kube-dns-amd64:1.14.4,gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64:1.14.4,gcr.io/google_containers/k8s-dns-sidecar-amd64:1.14.4   k8s-app=kube-dns,pod-template-hash=1099564669
```

docker ps on the node running the pod:

```
[root@docker3 ~]# docker ps
CONTAINER ID        IMAGE                                      COMMAND                  CREATED             STATUS              PORTS               NAMES
692db72631b3        myf5/k8s-dns-kube-dns-amd64                "/kube-dns --domai..."   4 minutes ago       Up 4 minutes                            k8s_kubedns_kube-dns-1099564669-hv1pj_kube-system_07a6d751-64cd-11e7-ae06-000c29420d98_0
f4f3cd1b8ac4        myf5/k8s-dns-sidecar-amd64                 "/sidecar --v=2 --..."   4 minutes ago       Up 4 minutes                            k8s_sidecar_kube-dns-1099564669-hv1pj_kube-system_07a6d751-64cd-11e7-ae06-000c29420d98_0
2085fa64a5f0        myf5/k8s-dns-dnsmasq-nanny-amd64           "/dnsmasq-nanny -v..."   4 minutes ago       Up 4 minutes                            k8s_dnsmasq_kube-dns-1099564669-hv1pj_kube-system_07a6d751-64cd-11e7-ae06-000c29420d98_0
a77f03e6ab19        gcr.io/google_containers/pause-amd64:3.0   "/pause"                 4 minutes ago       Up 4 minutes                            k8s_POD_kube-dns-1099564669-hv1pj_kube-system_07a6d751-64cd-11e7-ae06-000c29420d98_0
```

A quick check that the pod is answering DNS queries:

```
[root@docker1 kubedns]# dig @10.2.39.2 cluster.local. SOA

; <<>> DiG 9.9.4-RedHat-9.9.4-38.el7_3.3 <<>> @10.2.39.2 cluster.local. SOA
; (1 server found)
;; global options: +cmd
;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 1762
;; flags: qr aa rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 0

;; QUESTION SECTION:
;cluster.local.                 IN      SOA

;; ANSWER SECTION:
cluster.local.          3600    IN      SOA     ns.dns.cluster.local. hostmaster.cluster.local. 1499619600 28800 7200 604800 60

;; Query time: 1 msec
;; SERVER: 10.2.39.2#53(10.2.39.2)
;; WHEN: Mon Jul 10 01:55:15 CST 2017
;; MSG SIZE  rcvd: 85
```
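The SOA query above only proves that the pod itself answers. A slightly broader check also queries through the Service IP (169.169.0.53, which is what pods actually use) and resolves a real service name; kubernetes.default.svc.cluster.local is the API server's Service, which exists in every cluster. check_dns is a hypothetical helper that only relies on dig's @server and +short options.

```sh
# Query NAME (record type TYPE, default A) against SERVER and report whether
# dig returned at least one answer.
check_dns() {
  server=$1 name=$2 qtype=${3:-A}
  answer=$(dig "@$server" "$name" "$qtype" +short)
  if [ -n "$answer" ]; then
    # Join a multi-line answer onto one line for the report.
    printf 'OK   %s %s via %s -> %s\n' "$name" "$qtype" "$server" \
      "$(printf '%s' "$answer" | tr '\n' ' ')"
  else
    printf 'FAIL %s %s via %s\n' "$name" "$qtype" "$server"
    return 1
  fi
}

# With the addresses from this article:
# check_dns 10.2.39.2    cluster.local. SOA                       # pod IP, as above
# check_dns 169.169.0.53 kubernetes.default.svc.cluster.local.    # through the Service
```

If the pod IP answers but the Service IP does not, the problem is more likely in kube-proxy or the Service definition than in kube-dns itself.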

The full pod object (kubectl get pods -o yaml):

```
[root@docker1 ~]# kubectl get pods -n kube-system -o yaml
apiVersion: v1
items:
- apiVersion: v1
  kind: Pod
  metadata:
    annotations:
      kubernetes.io/created-by: |
        {"kind":"SerializedReference","apiVersion":"v1","reference":{"kind":"ReplicaSet","namespace":"kube-system","name":"kube-dns-1099564669","uid":"07a5d7d9-64cd-11e7-ae06-000c29420d98","apiVersion":"extensions","resourceVersion":"8433"}}
      scheduler.alpha.kubernetes.io/critical-pod: ""
    creationTimestamp: 2017-07-09T17:35:41Z
    generateName: kube-dns-1099564669-
    labels:
      k8s-app: kube-dns
      pod-template-hash: "1099564669"
    name: kube-dns-1099564669-hv1pj
    namespace: kube-system
    ownerReferences:
    - apiVersion: extensions/v1beta1
      blockOwnerDeletion: true
      controller: true
      kind: ReplicaSet
      name: kube-dns-1099564669
      uid: 07a5d7d9-64cd-11e7-ae06-000c29420d98
    resourceVersion: "8461"
    selfLink: /api/v1/namespaces/kube-system/pods/kube-dns-1099564669-hv1pj
    uid: 07a6d751-64cd-11e7-ae06-000c29420d98
  spec:
    containers:
    - args:
      - --domain=cluster.local.
      - --dns-port=10053
      - --config-dir=/kube-dns-config
      - --v=2
      - --kube-master-url=http://172.16.199.17:8080
      env:
      - name: PROMETHEUS_PORT
        value: "10055"
      image: gcr.io/google_containers/k8s-dns-kube-dns-amd64:1.14.4
      imagePullPolicy: IfNotPresent
      livenessProbe:
        failureThreshold: 5
        httpGet:
          path: /healthcheck/kubedns
          port: 10054
          scheme: HTTP
        initialDelaySeconds: 60
        periodSeconds: 10
        successThreshold: 1
        timeoutSeconds: 5
      name: kubedns
      ports:
      - containerPort: 10053
        name: dns-local
        protocol: UDP
      - containerPort: 10053
        name: dns-tcp-local
        protocol: TCP
      - containerPort: 10055
        name: metrics
        protocol: TCP
      readinessProbe:
        failureThreshold: 3
        httpGet:
          path: /readiness
          port: 8081
          scheme: HTTP
        initialDelaySeconds: 3
        periodSeconds: 10
        successThreshold: 1
        timeoutSeconds: 5
      resources:
        limits:
          memory: 170Mi
        requests:
          cpu: 100m
          memory: 70Mi
      terminationMessagePath: /dev/termination-log
      terminationMessagePolicy: File
      volumeMounts:
      - mountPath: /kube-dns-config
        name: kube-dns-config
    - args:
      - -v=2
      - -logtostderr
      - -configDir=/etc/k8s/dns/dnsmasq-nanny
      - -restartDnsmasq=true
      - --
      - -k
      - --cache-size=1000
      - --log-facility=-
      - --server=/cluster.local./127.0.0.1#10053
      - --server=/in-addr.arpa/127.0.0.1#10053
      - --server=/ip6.arpa/127.0.0.1#10053
      image: gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64:1.14.4
      imagePullPolicy: IfNotPresent
      livenessProbe:
        failureThreshold: 5
        httpGet:
          path: /healthcheck/dnsmasq
          port: 10054
          scheme: HTTP
        initialDelaySeconds: 60
        periodSeconds: 10
        successThreshold: 1
        timeoutSeconds: 5
      name: dnsmasq
      ports:
      - containerPort: 53
        name: dns
        protocol: UDP
      - containerPort: 53
        name: dns-tcp
        protocol: TCP
      resources:
        requests:
          cpu: 150m
          memory: 20Mi
      terminationMessagePath: /dev/termination-log
      terminationMessagePolicy: File
      volumeMounts:
      - mountPath: /etc/k8s/dns/dnsmasq-nanny
        name: kube-dns-config
    - args:
      - --v=2
      - --logtostderr
      - --probe=kubedns,127.0.0.1:10053,kubernetes.default.svc.cluster.local.,5,A
      - --probe=dnsmasq,127.0.0.1:53,kubernetes.default.svc.cluster.local.,5,A
      image: gcr.io/google_containers/k8s-dns-sidecar-amd64:1.14.4
      imagePullPolicy: IfNotPresent
      livenessProbe:
        failureThreshold: 5
        httpGet:
          path: /metrics
          port: 10054
          scheme: HTTP
        initialDelaySeconds: 60
        periodSeconds: 10
        successThreshold: 1
        timeoutSeconds: 5
      name: sidecar
      ports:
      - containerPort: 10054
        name: metrics
        protocol: TCP
      resources:
        requests:
          cpu: 10m
          memory: 20Mi
      terminationMessagePath: /dev/termination-log
      terminationMessagePolicy: File
    dnsPolicy: Default
    nodeName: 172.16.199.37
    restartPolicy: Always
    schedulerName: default-scheduler
    securityContext: {}
    serviceAccount: kube-dns
    serviceAccountName: kube-dns
    terminationGracePeriodSeconds: 30
    tolerations:
    - key: CriticalAddonsOnly
      operator: Exists
    volumes:
    - configMap:
        defaultMode: 420
        name: kube-dns
        optional: true
      name: kube-dns-config
  status:
    conditions:
    - lastProbeTime: null
      lastTransitionTime: 2017-07-09T17:35:41Z
      status: "True"
      type: Initialized
    - lastProbeTime: null
      lastTransitionTime: 2017-07-09T17:35:48Z
      status: "True"
      type: Ready
    - lastProbeTime: null
      lastTransitionTime: 2017-07-09T17:35:41Z
      status: "True"
      type: PodScheduled
    containerStatuses:
    - containerID: docker://2085fa64a5f04d401584e3d759236a68e472f211fc2b42060f02f045627fc621
      image: myf5/k8s-dns-dnsmasq-nanny-amd64:1.14.4
      imageID: docker-pullable://myf5/k8s-dns-dnsmasq-nanny-amd64@sha256:8e7a9752871ad5cef8b9a6f40c9227ec802d397930fdad0468733bdd70da6357
      lastState: {}
      name: dnsmasq
      ready: true
      restartCount: 0
      state:
        running:
          startedAt: 2017-07-09T17:35:43Z
    - containerID: docker://692db72631b3a1640a04165b16700f5611327e541600745e5e1eef7b53ca7a75
      image: myf5/k8s-dns-kube-dns-amd64:1.14.4
      imageID: docker-pullable://myf5/k8s-dns-kube-dns-amd64@sha256:93e31e20aa1feeed54d592c0f071b3d056ab834e676bb9fde54fd72918508796
      lastState: {}
      name: kubedns
      ready: true
      restartCount: 0
      state:
        running:
          startedAt: 2017-07-09T17:35:44Z
    - containerID: docker://f4f3cd1b8ac47c3b6ad4b8eed72491562a6c167831b1e92f2f866f69f395ee76
      image: myf5/k8s-dns-sidecar-amd64:1.14.4
      imageID: docker-pullable://myf5/k8s-dns-sidecar-amd64@sha256:975026e333c87138221a07312f35b943a244545cc7b746fb32d066297a91b41c
      lastState: {}
      name: sidecar
      ready: true
      restartCount: 0
      state:
        running:
          startedAt: 2017-07-09T17:35:43Z
    hostIP: 172.16.199.37
    phase: Running
    podIP: 10.2.39.2
    qosClass: Burstable
    startTime: 2017-07-09T17:35:41Z
kind: List
metadata: {}
resourceVersion: ""
selfLink: ""
```

Appendix: troubleshooting

Because I forgot to pull the pause image onto the nodes, the pod could not start and stayed in the ContainerCreating state:

```
[root@docker1 kubernetes]# kubectl get all -n kube-system -o wide
NAME                           READY     STATUS              RESTARTS   AGE       IP        NODE
po/kube-dns-2139945439-kp1v7   0/3       ContainerCreating   0          24m       <none>    172.16.199.37

NAME           CLUSTER-IP     EXTERNAL-IP   PORT(S)         AGE       SELECTOR
svc/kube-dns   169.169.0.53   <none>        53/UDP,53/TCP   1h        k8s-app=kube-dns

NAME              DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE       CONTAINER(S)              IMAGE(S)                                                                                                                                                                 SELECTOR
deploy/kube-dns   1         1         1            0           24m       kubedns,dnsmasq,sidecar   gcr.io/google_containers/k8s-dns-kube-dns-amd64:1.14.4,gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64:1.14.4,gcr.io/google_containers/k8s-dns-sidecar-amd64:1.14.4   k8s-app=kube-dns

NAME                     DESIRED   CURRENT   READY     AGE       CONTAINER(S)              IMAGE(S)                                                                                                                                                                 SELECTOR
rs/kube-dns-2139945439   1         1         0         24m       kubedns,dnsmasq,sidecar   gcr.io/google_containers/k8s-dns-kube-dns-amd64:1.14.4,gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64:1.14.4,gcr.io/google_containers/k8s-dns-sidecar-amd64:1.14.4   k8s-app=kube-dns,pod-template-hash=2139945439
```

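At this point, kubectl get events -n kube-system showed the cause: the kubelet could not pull the sandbox image gcr.io/google_containers/pause-amd64:3.0.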

```
[root@docker1 kubernetes]# kubectl get events -n kube-system
LASTSEEN   FIRSTSEEN   COUNT     NAME                        KIND         SUBOBJECT   TYPE      REASON              SOURCE                   MESSAGE
33m        1h          173       kube-dns-2139945439-6zqt3   Pod                      Warning   FailedSync          kubelet, 172.16.199.37   Error syncing pod, skipping: failed to "CreatePodSandbox" for "kube-dns-2139945439-6zqt3_kube-system(7a27e066-64b8-11e7-ae06-000c29420d98)" with CreatePodSandboxError: "CreatePodSandbox for pod \"kube-dns-2139945439-6zqt3_kube-system(7a27e066-64b8-11e7-ae06-000c29420d98)\" failed: rpc error: code = 2 desc = unable to pull sandbox image \"gcr.io/google_containers/pause-amd64:3.0\": Error response from daemon: {\"message\":\"Get https://gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)\"}"
25m        25m         1         kube-dns-2139945439-bg2fk   Pod                      Normal    Scheduled           default-scheduler        Successfully assigned kube-dns-2139945439-bg2fk to 172.16.199.27
23m        24m         2         kube-dns-2139945439-bg2fk   Pod                      Warning   FailedSync          kubelet, 172.16.199.27   Error syncing pod, skipping: failed to "CreatePodSandbox" for "kube-dns-2139945439-bg2fk_kube-system(1ff21307-64c5-11e7-ae06-000c29420d98)" with CreatePodSandboxError: "CreatePodSandbox for pod \"kube-dns-2139945439-bg2fk_kube-system(1ff21307-64c5-11e7-ae06-000c29420d98)\" failed: rpc error: code = 2 desc = unable to pull sandbox image \"gcr.io/google_containers/pause-amd64:3.0\": Error response from daemon: {\"message\":\"Get https://gcr.io/v1/_ping: dial tcp 108.177.97.82:443: i/o timeout\"}"
22m        22m         1         kube-dns-2139945439-kp1v7   Pod                      Normal    Scheduled           default-scheduler        Successfully assigned kube-dns-2139945439-kp1v7 to 172.16.199.37
12s        22m         48        kube-dns-2139945439-kp1v7   Pod                      Warning   FailedSync          kubelet, 172.16.199.37   Error syncing pod, skipping: failed to "CreatePodSandbox" for "kube-dns-2139945439-kp1v7_kube-system(752d819e-64c5-11e7-ae06-000c29420d98)" with CreatePodSandboxError: "CreatePodSandbox for pod \"kube-dns-2139945439-kp1v7_kube-system(752d819e-64c5-11e7-ae06-000c29420d98)\" failed: rpc error: code = 2 desc = unable to pull sandbox image \"gcr.io/google_containers/pause-amd64:3.0\": Error response from daemon: {\"message\":\"Get https://gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)\"}"
33m        33m         1         kube-dns-2139945439         ReplicaSet               Normal    SuccessfulDelete    replicaset-controller    Deleted pod: kube-dns-2139945439-6zqt3
25m        25m         1         kube-dns-2139945439         ReplicaSet               Normal    SuccessfulCreate    replicaset-controller    Created pod: kube-dns-2139945439-bg2fk
23m        23m         1         kube-dns-2139945439         ReplicaSet               Normal    SuccessfulDelete    replicaset-controller    Deleted pod: kube-dns-2139945439-bg2fk
22m        22m         1         kube-dns-2139945439         ReplicaSet               Normal    SuccessfulCreate    replicaset-controller    Created pod: kube-dns-2139945439-kp1v7
33m        33m         1         kube-dns                    Deployment               Normal    ScalingReplicaSet   deployment-controller    Scaled down replica set kube-dns-2139945439 to 0
25m        25m         1         kube-dns                    Deployment               Normal    ScalingReplicaSet   deployment-controller    Scaled up replica set kube-dns-2139945439 to 1
23m        23m         1         kube-dns                    Deployment               Normal    ScalingReplicaSet   deployment-controller    Scaled down replica set kube-dns-2139945439 to 0
22m        22m         1         kube-dns                    Deployment               Normal    ScalingReplicaSet   deployment-controller    Scaled up replica set kube-dns-2139945439 to 1
```
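The failure comes from the kubelet trying to fetch its sandbox (pause) image from gcr.io when the image is not present locally. A pre-flight check on each node catches this before the addon is created. missing_images is a hypothetical helper built on docker inspect --type=image, which exits non-zero when the image is not in the local store. Alternatively, the kubelet's --pod-infra-container-image flag can point the sandbox image at a reachable registry, for example the myf5/pause-amd64:3.0 mirror used above.

```sh
# List images that are not present in the local Docker image store.
# Returns non-zero if any are missing.
missing_images() {
  rc=0
  for img in "$@"; do
    if ! docker inspect --type=image "$img" >/dev/null 2>&1; then
      echo "missing: $img"
      rc=1
    fi
  done
  return $rc
}

# Example for the images used by this kube-dns deployment:
# missing_images \
#   gcr.io/google_containers/pause-amd64:3.0 \
#   gcr.io/google_containers/k8s-dns-kube-dns-amd64:1.14.4 \
#   gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64:1.14.4 \
#   gcr.io/google_containers/k8s-dns-sidecar-amd64:1.14.4
```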

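After that was fixed, the kubedns container still could not start, because the original Deployment file did not specify the master URL (--kube-master-url). The dnsmasq container then kept failing its liveness probe as well, presumably because dnsmasq forwards cluster.local queries to kubedns, so the sidecar's dnsmasq check could not get an answer either. Adding the master URL to the configuration fixed the problem. The events at the time contained errors like these: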

```
16m        17m         7         kube-dns-2139945439-tlnt0   Pod                                   Warning   FailedSync   kubelet, 172.16.199.27   Error syncing pod, skipping: failed to "StartContainer" for "kubedns" with CrashLoopBackOff: "Back-off 1m20s restarting failed container=kubedns pod=kube-dns-2139945439-tlnt0_kube-system(5ae8de0b-64ca-11e7-ae06-000c29420d98)"
17m        17m         1         kube-dns-2139945439-tlnt0   Pod          spec.containers{dnsmasq}   Normal    Killing      kubelet, 172.16.199.27   Killing container with id docker://63aa8d879e66500e1be7c13426cdf2090cac34254df03822a76f97a36b256802:pod "kube-dns-2139945439-tlnt0_kube-system(5ae8de0b-64ca-11e7-ae06-000c29420d98)" container "dnsmasq" is unhealthy, it will be killed and re-created.
```
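Since this failure traced back to a missing --kube-master-url, a small check can confirm that the flag is present in the manifest and that the URL answers. A sketch: master_url and check_master are hypothetical names, and it assumes the insecure HTTP API port used in this article (http://172.16.199.17:8080), on which the API server's /healthz endpoint returns ok.

```sh
# Print the --kube-master-url value from a kube-dns controller manifest
# (commented-out lines are ignored because they do not start with "- ").
master_url() {
  sed -n 's/^[[:space:]]*- --kube-master-url=\([^[:space:]]*\).*$/\1/p' "$1" | head -n 1
}

# Check that the manifest names a master and that its /healthz answers "ok".
check_master() {
  url=$(master_url "$1")
  if [ -z "$url" ]; then
    echo "no --kube-master-url in $1" >&2
    return 1
  fi
  if [ "$(curl -s --max-time 5 "$url/healthz")" = "ok" ]; then
    echo "OK: $url is reachable"
  else
    echo "FAIL: $url/healthz did not return ok" >&2
    return 1
  fi
}

# check_master /root/kubedns/kubedns-controller.yaml
```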

Comments

Does configuring DNS require configuring SSL?

@渡河人 No.

I used the YAML files from GitHub, but when I run the controller file I get: Killing container with id docker://c57ee12790fe565365b13833177c14046373e169da19935fd0d40206862c6870:pod "kube-dns-1631782461-3dqvx_kube-system(78458c79-8bce-11e7-a72c-000c29e37283)" container "dnsmasq" is unhealthy, it will be killed and re-created

@渡河人 It looks like the dnsmasq container is failing its liveness probe. Follow the events and check the container itself.

```yaml
livenessProbe:
  httpGet:
    path: /healthcheck/dnsmasq
    port: 10054
    scheme: HTTP
  initialDelaySeconds: 60
  timeoutSeconds: 5
  successThreshold: 1
  failureThreshold: 5
```
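The probe quoted above hits port 10054, which is served by the sidecar container, so the root cause is often in kubedns or the sidecar rather than in dnsmasq itself. Collecting the pod's events and the logs of all three containers is a reasonable first step. debug_kube_dns_pod is a hypothetical helper; with DRY_RUN=1 it only prints the kubectl commands.

```sh
# Print (DRY_RUN=1) or run a command.
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Collect events and logs for one kube-dns pod.
debug_kube_dns_pod() {
  pod=$1
  run kubectl describe pod -n kube-system "$pod"       # events, probe failures
  for c in kubedns dnsmasq sidecar; do
    run kubectl logs -n kube-system "$pod" -c "$c"     # current container logs
    # Logs of the previous (crashed) instance; fails harmlessly if there is none.
    run kubectl logs -n kube-system "$pod" -c "$c" --previous
  done
}

# debug_kube_dns_pod kube-dns-1631782461-3dqvx
```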