测试搭建一个使用kafka作为消息队列的ELK环境,数据采集转换实现结构如下:

F5 HSL-->logstash(流处理)--> kafka -->elasticsearch

测试中的elk版本为6.3, confluent版本是4.1.1

希望实现的效果是 HSL发送的日志胫骨logstash进行流处理后输出为json,该json类容原样直接保存到kafka中,kafka不再做其它方面的格式处理。

测试环境:

192.168.214.138: 安装 logstash,confluent环境

192.168.214.137: 安装ELK套件(停用logstash,只启动es和kibana)

confluent安装调试备忘:

-

- 像安装elk环境一样,安装java环境先

- 首先在不考虑kafka的情形下,实现F5 HSL---Logstash--ES的正常运行,并实现简单的正常kibana的展现。后面改用kafka时候直接将这里output修改为kafka plugin配置即可。

此时logstash的相关配置

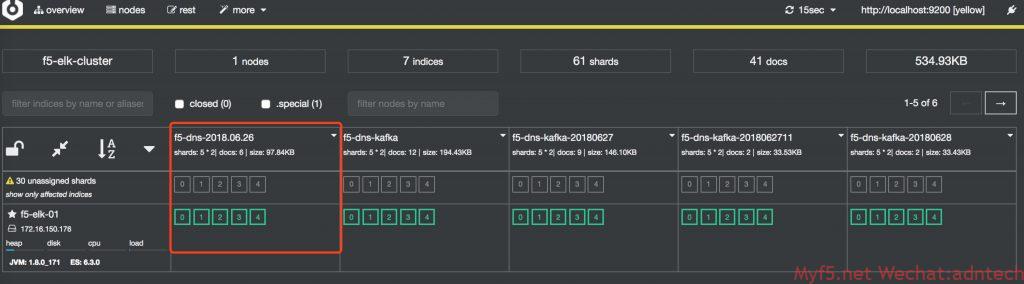

1234567891011121314151617181920212223242526272829303132333435input {udp {port => 8514type => 'f5-dns'}}filter {if [type] == 'f5-dns' {grok {match => { "message" => "%{HOSTNAME:F5hostname} %{IP:clientip} %{POSINT:clientport} %{IP:svrip} %{NUMBER:qid} %{HOSTNAME:qname} %{GREEDYDATA:qtype} %{GREEDYDATA:status} %{GREEDYDATA:origin}" }}geoip {source => "clientip"target => "geoip"}}}output {#stdout{ codec => rubydebug }#elasticsearch {# hosts => ["192.168.214.137:9200"]# index => "f5-dns-%{+YYYY.MM.dd}"#template_name => "f5-dns"#}kafka {codec => jsonbootstrap_servers => "localhost:9092"topic_id => "f5-dns-kafka"}} - 发一些测试流量,确认es正常收到数据,查看cerebro上显示的状态。(截图是调试完毕后截图)

cd /usr/share/cerebro/cerebro-0.8.1/;./bin/cerebro -Dhttp.port=9110 -Dhttp.address=0.0.0.0

- 安装confluent,由于是测试环境,直接confluent官方网站下载压缩包,解压后使用。位置在/root/confluent-4.1.1/下

- 由于是测试环境,直接用confluent的命令行来启动所有相关服务,发现kakfa启动失败

12345678[root@kafka-logstash bin]# ./confluent startUsing CONFLUENT_CURRENT: /tmp/confluent.dA0KYIWjStarting zookeeperzookeeper is [UP]Starting kafka/Kafka failed to startkafka is [DOWN]Cannot start Schema Registry, Kafka Server is not running. Check your deployment

检查发现由于虚机内存给太少了,导致java无法分配足够内存给kafka

12[root@kafka-logstash bin]# ./kafka-server-start ../etc/kafka/server.propertiesOpenJDK 64-Bit Server VM warning: INFO: os::commit_memory(0x00000000c0000000, 1073741824, 0) failed; error='Cannot allocate memory' (errno=12)

扩大虚拟机内存,并将logstash的jvm配置中设置的内存调小 - kafka server配置文件

123456789101112131415161718192021222324[root@kafka-logstash kafka]# pwd/root/confluent-4.1.1/etc/kafka[root@kafka-logstash kafka]# egrep -v "^#|^$" server.propertiesbroker.id=0listeners=PLAINTEXT://localhost:9092num.network.threads=3num.io.threads=8socket.send.buffer.bytes=102400socket.receive.buffer.bytes=102400socket.request.max.bytes=104857600log.dirs=/tmp/kafka-logsnum.partitions=1num.recovery.threads.per.data.dir=1offsets.topic.replication.factor=1transaction.state.log.replication.factor=1transaction.state.log.min.isr=1log.retention.hours=168log.segment.bytes=1073741824log.retention.check.interval.ms=300000zookeeper.connect=localhost:2181zookeeper.connection.timeout.ms=6000confluent.support.metrics.enable=trueconfluent.support.customer.id=anonymousgroup.initial.rebalance.delay.ms=0 - connect 配置文件,此配置中,将原来的avro converter替换成了json,同时关闭了key vlaue的schema识别。因为我们输入的内容是直接的json类容,没有相关schema,这里只是希望kafka原样解析logstash输出的json内容到es

123456789101112131415[root@kafka-logstash kafka]# pwd/root/confluent-4.1.1/etc/kafka[root@kafka-logstash kafka]# egrep -v "^#|^$" connect-standalone.propertiesbootstrap.servers=localhost:9092key.converter=org.apache.kafka.connect.json.JsonConvertervalue.converter=org.apache.kafka.connect.json.JsonConverterkey.converter.schemas.enable=falsevalue.converter.schemas.enable=falseinternal.key.converter=org.apache.kafka.connect.json.JsonConverterinternal.value.converter=org.apache.kafka.connect.json.JsonConverterinternal.key.converter.schemas.enable=falseinternal.value.converter.schemas.enable=falseoffset.storage.file.filename=/tmp/connect.offsetsoffset.flush.interval.ms=10000plugin.path=share/java

如果不做上述修改,connect总会在将日志sink到ES时提示无法反序列化,magic byte错误等。如果使用confluent status命令查看,会发现connect会从up变为down

1234567[root@kafka-logstash confluent-4.1.1]# ./bin/confluent statusksql-server is [DOWN]connect is [DOWN]kafka-rest is [UP]schema-registry is [UP]kafka is [UP]zookeeper is [UP]

- schema-registry 相关配置

1234567891011121314151617181920212223[root@kafka-logstash schema-registry]# pwd/root/confluent-4.1.1/etc/schema-registry[root@kafka-logstash schema-registry]# egrep -v "^#|^$"connect-avro-distributed.properties connect-avro-standalone.properties log4j.properties schema-registry.properties[root@kafka-logstash schema-registry]# egrep -v "^#|^$" connect-avro-standalone.propertiesbootstrap.servers=localhost:9092key.converter.schema.registry.url=http://localhost:8081value.converter.schema.registry.url=http://localhost:8081key.converter=org.apache.kafka.connect.json.JsonConvertervalue.converter=org.apache.kafka.connect.json.JsonConverterkey.converter.schemas.enable=falsevalue.converter.schemas.enable=falseinternal.key.converter=org.apache.kafka.connect.json.JsonConverterinternal.value.converter=org.apache.kafka.connect.json.JsonConverterinternal.key.converter.schemas.enable=falseinternal.value.converter.schemas.enable=falseoffset.storage.file.filename=/tmp/connect.offsetsplugin.path=share/java[root@kafka-logstash schema-registry]# egrep -v "^#|^$" schema-registry.propertieslisteners=http://0.0.0.0:8081kafkastore.connection.url=localhost:2181kafkastore.topic=_schemasdebug=false - es-connector的配置文件

12345678910111213141516[root@kafka-logstash kafka-connect-elasticsearch]# pwd/root/confluent-4.1.1/etc/kafka-connect-elasticsearch[root@kafka-logstash kafka-connect-elasticsearch]# egrep -v "^#|^$" quickstart-elasticsearch.propertiesname=f5-dnsconnector.class=io.confluent.connect.elasticsearch.ElasticsearchSinkConnectortasks.max=1topics=f5-dns-kafkakey.ignore=truevalue.ignore=trueschema.ignore=trueconnection.url=http://192.168.214.137:9200type.name=doctransforms=MyRoutertransforms.MyRouter.type=org.apache.kafka.connect.transforms.TimestampRoutertransforms.MyRouter.topic.format=${topic}-${timestamp}transforms.MyRouter.timestamp.format=yyyyMMdd

上述配置中topics配置是希望传输到ES的topic,通过设置transform的timestamp router来实现将topic按天动态映射为ES中的index,这样可以让ES每天产生一个index。注意需要配置schema.ignore=true,否则kafka无法将受收到的数据发送到ES上,connect的 connect.stdout 日志会显示:

12345678910[root@kafka-logstash connect]# pwd/tmp/confluent.dA0KYIWj/connectCaused by: org.apache.kafka.connect.errors.DataException: Cannot infer mapping without schema.at io.confluent.connect.elasticsearch.Mapping.inferMapping(Mapping.java:84)at io.confluent.connect.elasticsearch.jest.JestElasticsearchClient.createMapping(JestElasticsearchClient.java:221)at io.confluent.connect.elasticsearch.Mapping.createMapping(Mapping.java:66)at io.confluent.connect.elasticsearch.ElasticsearchWriter.write(ElasticsearchWriter.java:260)at io.confluent.connect.elasticsearch.ElasticsearchSinkTask.put(ElasticsearchSinkTask.java:162)at org.apache.kafka.connect.runtime.WorkerSinkTask.deliverMessages(WorkerSinkTask.java:524) - 配置修正完毕后,向logstash发送数据,发现日志已经可以正常发送到了ES上,且格式和没有kafka时是一致的。

没有kafka时:

12345678910111213141516171819202122232425262728293031323334353637383940{"_index": "f5-dns-2018.06.26","_type": "doc","_id": "KrddO2QBXB-i0ay0g5G9","_version": 1,"_score": 1,"_source": {"message": "localhost.lan 202.202.102.100 53777 172.16.199.136 42487 www.test.com A NOERROR GTM_REWRITE ","F5hostname": "localhost.lan","qid": "42487","clientip": "202.202.102.100","geoip": {"region_name": "Chongqing","location": {"lon": 106.5528,"lat": 29.5628},"country_code2": "CN","timezone": "Asia/Shanghai","country_name": "China","region_code": "50","continent_code": "AS","city_name": "Chongqing","country_code3": "CN","ip": "202.202.102.100","latitude": 29.5628,"longitude": 106.5528},"status": "NOERROR","qname": "www.test.com","clientport": "53777","@version": "1","@timestamp": "2018-06-26T09:12:21.585Z","host": "192.168.214.1","type": "f5-dns","qtype": "A","origin": "GTM_REWRITE ","svrip": "172.16.199.136"}}

有kafka时:

12345678910111213141516171819202122232425262728293031323334353637383940{"_index": "f5-dns-kafka-20180628","_type": "doc","_id": "f5-dns-kafka-20180628+0+23","_version": 1,"_score": 1,"_source": {"F5hostname": "localhost.lan","geoip": {"city_name": "Chongqing","timezone": "Asia/Shanghai","ip": "202.202.100.100","latitude": 29.5628,"country_name": "China","country_code2": "CN","continent_code": "AS","country_code3": "CN","region_name": "Chongqing","location": {"lon": 106.5528,"lat": 29.5628},"region_code": "50","longitude": 106.5528},"qtype": "A","origin": "DNSX ","type": "f5-dns","message": "localhost.lan 202.202.100.100 53777 172.16.199.136 42487 www.myf5.net A NOERROR DNSX ","qid": "42487","clientport": "53777","@timestamp": "2018-06-28T09:05:20.594Z","clientip": "202.202.100.100","qname": "www.myf5.net","host": "192.168.214.1","@version": "1","svrip": "172.16.199.136","status": "NOERROR"}}

- 相关REST API输出

12345678910111213141516171819202122232425262728293031323334353637383940414243444546474849505152535455565758596061626364656667686970http://192.168.214.138:8083/connectors/elasticsearch-sink/tasks[{"id": {"connector": "elasticsearch-sink","task": 0},"config": {"connector.class": "io.confluent.connect.elasticsearch.ElasticsearchSinkConnector","type.name": "doc","value.ignore": "true","tasks.max": "1","topics": "f5-dns-kafka","transforms.MyRouter.topic.format": "${topic}-${timestamp}","transforms": "MyRouter","key.ignore": "true","schema.ignore": "true","transforms.MyRouter.timestamp.format": "yyyyMMdd","task.class": "io.confluent.connect.elasticsearch.ElasticsearchSinkTask","name": "elasticsearch-sink","connection.url": "http://192.168.214.137:9200","transforms.MyRouter.type": "org.apache.kafka.connect.transforms.TimestampRouter"}}]http://192.168.214.138:8083/connectors/elasticsearch-sink/{"name": "elasticsearch-sink","config": {"connector.class": "io.confluent.connect.elasticsearch.ElasticsearchSinkConnector","type.name": "doc","value.ignore": "true","tasks.max": "1","topics": "f5-dns-kafka","transforms.MyRouter.topic.format": "${topic}-${timestamp}","transforms": "MyRouter","key.ignore": "true","schema.ignore": "true","transforms.MyRouter.timestamp.format": "yyyyMMdd","name": "elasticsearch-sink","connection.url": "http://192.168.214.137:9200","transforms.MyRouter.type": "org.apache.kafka.connect.transforms.TimestampRouter"},"tasks": [{"connector": "elasticsearch-sink","task": 0}],"type": "sink"}http://192.168.214.138:8083/connectors/elasticsearch-sink/status{"name": "elasticsearch-sink","connector": {"state": "RUNNING","worker_id": "172.16.150.179:8083"},"tasks": [{"state": "RUNNING","id": 0,"worker_id": "172.16.150.179:8083"}],"type": "sink"}

123456789101112131415161718192021222324252627282930313233343536373839404142434445464748495051525354555657585960616263646566http://192.168.214.138:8082/brokers{"brokers": [0]}http://192.168.214.138:8082/topics["__confluent.support.metrics","_confluent-ksql-default__command_topic","_schemas","connect-configs","connect-offsets","connect-statuses","f5-dns-2018.06","f5-dns-2018.06.27","f5-dns-kafka","test-elasticsearch-sink"]http://192.168.214.138:8082/topics/f5-dns-kafka{"name": "f5-dns-kafka","configs": {"file.delete.delay.ms": "60000","segment.ms": "604800000","min.compaction.lag.ms": "0","retention.bytes": "-1","segment.index.bytes": "10485760","cleanup.policy": "delete","follower.replication.throttled.replicas": "","message.timestamp.difference.max.ms": "9223372036854775807","segment.jitter.ms": "0","preallocate": "false","segment.bytes": "1073741824","message.timestamp.type": "CreateTime","message.format.version": "1.1-IV0","max.message.bytes": "1000012","unclean.leader.election.enable": "false","retention.ms": "604800000","flush.ms": "9223372036854775807","delete.retention.ms": "86400000","leader.replication.throttled.replicas": "","min.insync.replicas": "1","flush.messages": "9223372036854775807","compression.type": "producer","min.cleanable.dirty.ratio": "0.5","index.interval.bytes": "4096"},"partitions": [{"partition": 0,"leader": 0,"replicas": [{"broker": 0,"leader": true,"in_sync": true}]}]}

测试中kafka的配置基本都为确实配置,没有考虑任何的内存优化,kafka使用磁盘的大小考虑等

测试参考:

https://docs.confluent.io/current/installation/installing_cp.html

https://docs.confluent.io/current/connect/connect-elasticsearch/docs/elasticsearch_connector.html

https://docs.confluent.io/current/connect/connect-elasticsearch/docs/configuration_options.html

存储机制参考 https://blog.csdn.net/opensure/article/details/46048589

kafka配置参数参考 https://blog.csdn.net/lizhitao/article/details/25667831

更多kafka原理 https://blog.csdn.net/ychenfeng/article/details/74980531

confluent CLI:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 |

confluent: A command line interface to manage Confluent services Usage: confluent <command> [<subcommand>] [<parameters>] These are the available commands: acl Specify acl for a service. config Configure a connector. current Get the path of the data and logs of the services managed by the current confluent run. destroy Delete the data and logs of the current confluent run. list List available services. load Load a connector. log Read or tail the log of a service. start Start all services or a specific service along with its dependencies status Get the status of all services or the status of a specific service along with its dependencies. stop Stop all services or a specific service along with the services depending on it. top Track resource usage of a service. unload Unload a connector. 'confluent help' lists available commands. See 'confluent help <command>' to read about a specific command. |

confluent platform 服务端口表

| Component | Default Port |

|---|---|

| Apache Kafka brokers (plain text) | 9092 |

| Confluent Control Center | 9021 |

| Kafka Connect REST API | 8083 |

| KSQL Server REST API | 8088 |

| REST Proxy | 8082 |

| Schema Registry REST API | 8081 |

| ZooKeeper | 2181 |

文章评论