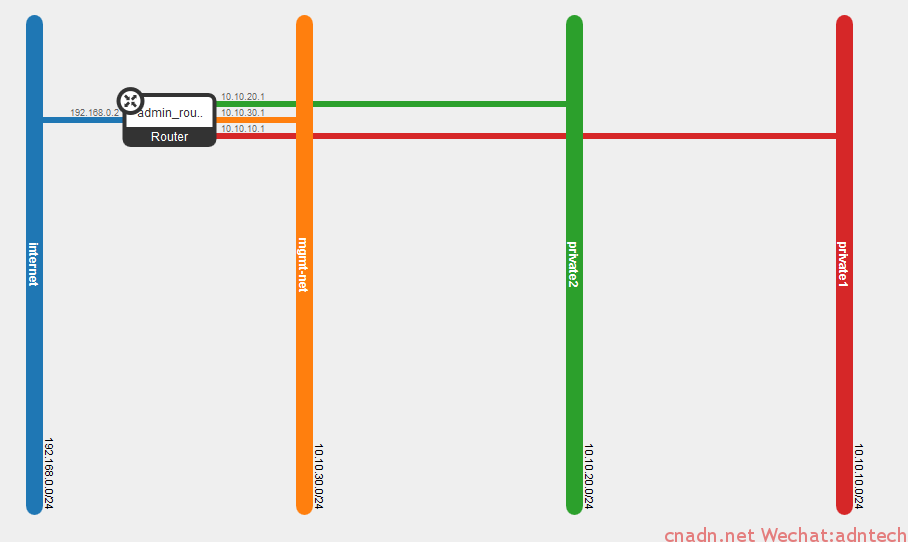

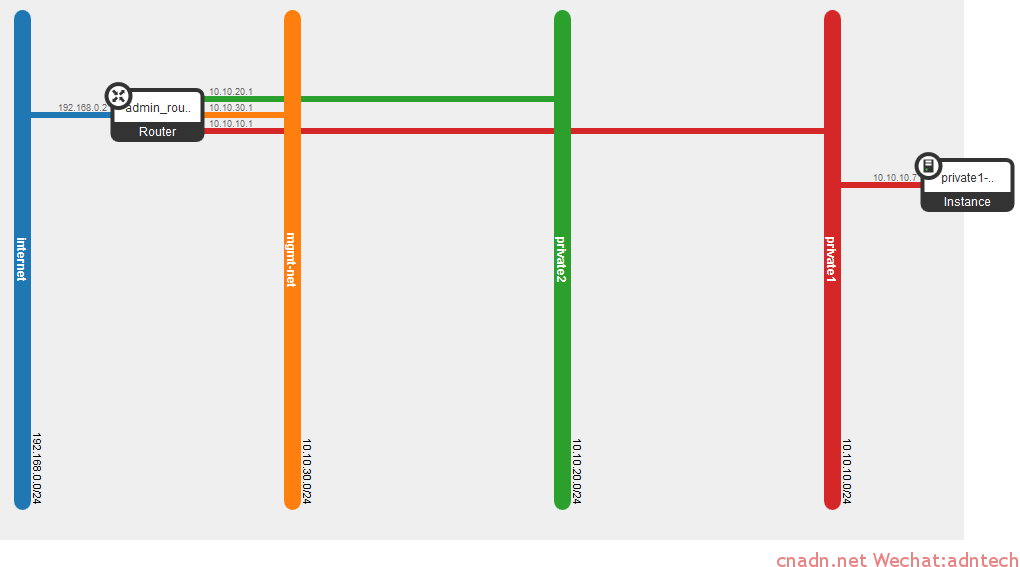

The lab network topology is as follows:

First, create an L3 router and set up three networks for the tenant.

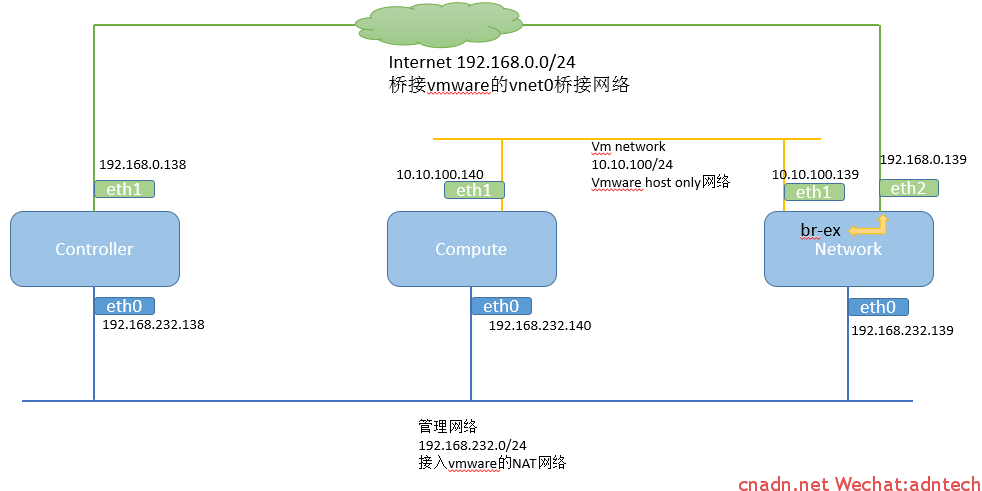

Network node status:

    root@network:/home/mycisco# ovs-vsctl show
    909c85d0-ff4e-446b-bf8a-9166f0fccd24
        Bridge br-int
            fail_mode: secure
            Port patch-tun
                Interface patch-tun
                    type: patch
                    options: {peer=patch-int}
            Port "tap78dd7f1e-53"
                tag: 9
                Interface "tap78dd7f1e-53"
                    type: internal
            Port "tap708ee330-0c"
                tag: 11
                Interface "tap708ee330-0c"
                    type: internal
            Port "taped034aae-11"
                tag: 8
                Interface "taped034aae-11"
                    type: internal
            Port "qr-d8fd27a6-d1"
                tag: 9
                Interface "qr-d8fd27a6-d1"
                    type: internal
            Port "qr-09208a89-0b"
                tag: 8
                Interface "qr-09208a89-0b"
                    type: internal
            Port "qr-4debe62b-e4"
                tag: 11
                Interface "qr-4debe62b-e4"
                    type: internal
            Port br-int
                Interface br-int
                    type: internal
        Bridge br-tun
            Port patch-int
                Interface patch-int
                    type: patch
                    options: {peer=patch-tun}
            Port "gre-0a0a648c"
                Interface "gre-0a0a648c"
                    type: gre
                    options: {in_key=flow, local_ip="10.10.100.139", out_key=flow, remote_ip="10.10.100.140"}
            Port br-tun
                Interface br-tun
                    type: internal
        Bridge br-ex
            Port "eth2"
                Interface "eth2"
            Port br-ex
                Interface br-ex
                    type: internal
            Port "qg-6f726a6c-41"
                Interface "qg-6f726a6c-41"
                    type: internal
        ovs_version: "2.0.2"

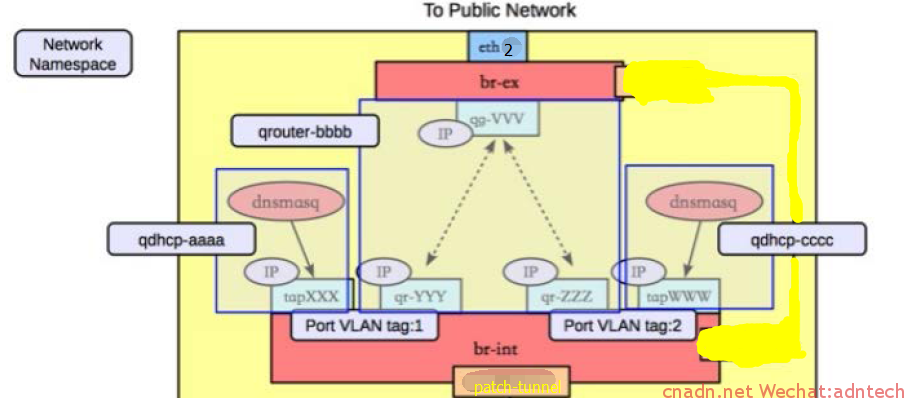

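In br-int, the tap* ports are the DHCP interfaces and the qr-* ports are the tenant router's interfaces. There is currently only one tenant, and the output shows that three networks are attached to this L3 router. The actual network topology is as follows:

At this point the virtual router actually has four interfaces. You can think of br-int as the internal switch for the tenant's private networks, divided into three independent VLANs, each of which has one interface connected to the virtual router.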
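
To make the "three VLANs on one switch" picture concrete, here is a minimal sketch that groups the br-int ports by VLAN tag. The input is an abbreviated copy of the network node output, and the helper name `ports_by_tag` is made up for this illustration; it is not part of any OVS tooling.

```python
import re
from collections import defaultdict

# Abbreviated br-int section of the network node's `ovs-vsctl show` output.
OVS_SHOW = """\
    Bridge br-int
        Port "tap78dd7f1e-53"
            tag: 9
        Port "tap708ee330-0c"
            tag: 11
        Port "taped034aae-11"
            tag: 8
        Port "qr-d8fd27a6-d1"
            tag: 9
        Port "qr-09208a89-0b"
            tag: 8
        Port "qr-4debe62b-e4"
            tag: 11
"""

def ports_by_tag(text):
    """Return {vlan_tag: [port names]} for every tagged port (hypothetical helper)."""
    groups = defaultdict(list)
    port = None
    for line in text.splitlines():
        line = line.strip()
        m = re.match(r'Port "?([^"]+)"?$', line)
        if m:
            port = m.group(1)
            continue
        m = re.match(r"tag: (\d+)$", line)
        if m and port is not None:
            groups[int(m.group(1))].append(port)
    return dict(groups)

groups = ports_by_tag(OVS_SHOW)
for tag in sorted(groups):
    print(tag, groups[tag])
```

Each tag ends up with exactly one tap (DHCP) port and one qr (router) port, which matches the description of one router interface per VLAN.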

The blue network to the left of the router can be regarded as the provider network. The router attaches to br-ex, which bridges the router's blue network to the physical interface eth2:

        Bridge br-ex
            Port "eth2"
                Interface "eth2"
            Port br-ex
                Interface br-ex
                    type: internal
            Port "qg-6f726a6c-41"
                Interface "qg-6f726a6c-41"
                    type: internal

In fact, these networks are isolated from one another by means of namespaces:

    root@network:/home/mycisco# ip netns show
    qdhcp-224f79e0-8068-4ef7-8c79-2326bc0cf5c4
    qdhcp-70f7aa46-b66b-455e-896e-05f94a08fcb8
    qrouter-040c1455-6096-4806-ba91-fec64cdaed81
    qdhcp-4e3f621f-d6b9-4438-be58-aa51d3c2e061

Each network has its own namespace, and the router has one as well. Functionally this resembles a route domain on F5: each namespace is a separate context.
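
As a small sketch of how the namespace list can be read, the snippet below splits the names into DHCP and router namespaces by prefix (`qdhcp-` followed by a network UUID, `qrouter-` followed by a router UUID). The function name `classify` is hypothetical and only serves the illustration.

```python
# Namespace names copied from the `ip netns show` output.
NETNS = """\
qdhcp-224f79e0-8068-4ef7-8c79-2326bc0cf5c4
qdhcp-70f7aa46-b66b-455e-896e-05f94a08fcb8
qrouter-040c1455-6096-4806-ba91-fec64cdaed81
qdhcp-4e3f621f-d6b9-4438-be58-aa51d3c2e061
"""

def classify(names):
    """Split namespace names into DHCP and router namespaces (hypothetical helper)."""
    result = {"dhcp": [], "router": []}
    for name in names.split():
        kind, _, uuid = name.partition("-")
        if kind == "qdhcp":
            result["dhcp"].append(uuid)
        elif kind == "qrouter":
            result["router"].append(uuid)
    return result

ns = classify(NETNS)
print(len(ns["dhcp"]), "DHCP namespaces,", len(ns["router"]), "router namespace")

# Commands are only printed here; run them on the network node to look inside.
for uuid in ns["router"]:
    print(f"ip netns exec qrouter-{uuid} ip addr")
```

Three DHCP namespaces and one router namespace line up with the three tenant networks and the single L3 router created earlier.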

Inside the network node, the relationship between the DHCP interfaces and the namespaces is as follows:

Compute node status:

    root@compute:/home/mycisco# ovs-vsctl show
    b5553502-95e4-4ad2-90a6-a3da02d3819d
        Bridge br-tun
            Port br-tun
                Interface br-tun
                    type: internal
            Port patch-int
                Interface patch-int
                    type: patch
                    options: {peer=patch-tun}
            Port "gre-0a0a648b"
                Interface "gre-0a0a648b"
                    type: gre
                    options: {in_key=flow, local_ip="10.10.100.140", out_key=flow, remote_ip="10.10.100.139"}
        Bridge br-int
            fail_mode: secure
            Port patch-tun
                Interface patch-tun
                    type: patch
                    options: {peer=patch-int}
            Port br-int
                Interface br-int
                    type: internal
        ovs_version: "2.0.2"

No instance has been started on the compute node yet, so there are no VM-related interfaces. The network interfaces on the compute node hypervisor currently look like this:

    root@compute:/home/mycisco# ifconfig
    br-int    Link encap:Ethernet HWaddr d2:2d:3d:fc:72:44
              inet6 addr: fe80::b406:33ff:fea3:293c/64 Scope:Link
              UP BROADCAST RUNNING MTU:1500 Metric:1
              RX packets:41 errors:0 dropped:0 overruns:0 frame:0
              TX packets:8 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:3258 (3.2 KB) TX bytes:648 (648.0 B)

    br-tun    Link encap:Ethernet HWaddr 2a:fd:e3:83:b9:4f
              inet6 addr: fe80::a8f6:49ff:fe0d:5dd5/64 Scope:Link
              UP BROADCAST RUNNING MTU:1500 Metric:1
              RX packets:0 errors:0 dropped:0 overruns:0 frame:0
              TX packets:8 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:0 (0.0 B) TX bytes:648 (648.0 B)

    eth0      Link encap:Ethernet HWaddr 00:0c:29:07:13:bf
              inet addr:192.168.232.140 Bcast:192.168.232.255 Mask:255.255.255.0
              inet6 addr: fe80::20c:29ff:fe07:13bf/64 Scope:Link
              UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1
              RX packets:270661 errors:0 dropped:0 overruns:0 frame:0
              TX packets:307615 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:1000
              RX bytes:67530181 (67.5 MB) TX bytes:115865767 (115.8 MB)

    eth1      Link encap:Ethernet HWaddr 00:0c:29:07:13:c9
              inet addr:10.10.100.140 Bcast:10.10.100.255 Mask:255.255.255.0
              inet6 addr: fe80::20c:29ff:fe07:13c9/64 Scope:Link
              UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1
              RX packets:19723 errors:0 dropped:0 overruns:0 frame:0
              TX packets:26 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:1000
              RX bytes:1859485 (1.8 MB) TX bytes:2662 (2.6 KB)
              Interrupt:16 Base address:0x2000

    lo        Link encap:Local Loopback
              inet addr:127.0.0.1 Mask:255.0.0.0
              inet6 addr: ::1/128 Scope:Host
              UP LOOPBACK RUNNING MTU:65536 Metric:1
              RX packets:3 errors:0 dropped:0 overruns:0 frame:0
              TX packets:3 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:333 (333.0 B) TX bytes:333 (333.0 B)

    virbr0    Link encap:Ethernet HWaddr 6a:cc:bc:f5:91:be
              inet addr:192.168.122.1 Bcast:192.168.122.255 Mask:255.255.255.0
              UP BROADCAST MULTICAST MTU:1500 Metric:1
              RX packets:0 errors:0 dropped:0 overruns:0 frame:0
              TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

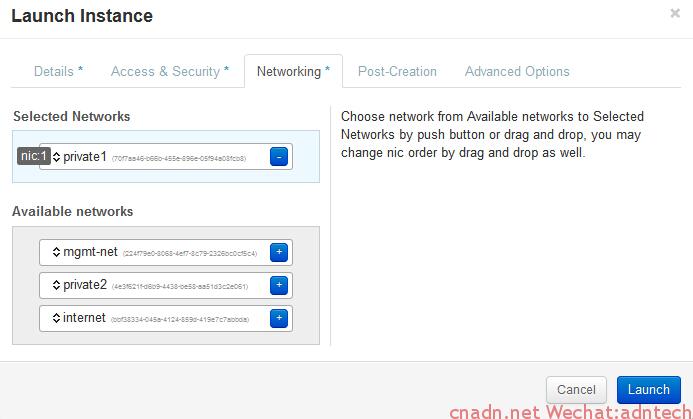

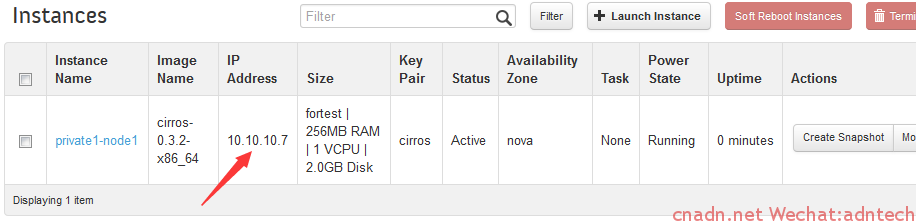

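Next, launch an instance in the private1 network:

The instance automatically obtains the IP address 10.10.10.7. The network topology now looks like this:

Log in to the instance and check:

The instance has obtained its IP address and default gateway automatically via DHCP.

From the instance, pinging the gateway and pinging the router's external network interface both succeed, which shows that the router is working properly.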

On the compute node hypervisor, the network interfaces have now changed to:

    root@compute:/home/mycisco# ifconfig
    br-int    Link encap:Ethernet HWaddr d2:2d:3d:fc:72:44
              inet6 addr: fe80::b406:33ff:fea3:293c/64 Scope:Link
              UP BROADCAST RUNNING MTU:1500 Metric:1
              RX packets:64 errors:0 dropped:0 overruns:0 frame:0
              TX packets:8 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:5506 (5.5 KB) TX bytes:648 (648.0 B)

    br-tun    Link encap:Ethernet HWaddr 2a:fd:e3:83:b9:4f
              inet6 addr: fe80::a8f6:49ff:fe0d:5dd5/64 Scope:Link
              UP BROADCAST RUNNING MTU:1500 Metric:1
              RX packets:0 errors:0 dropped:0 overruns:0 frame:0
              TX packets:8 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:0 (0.0 B) TX bytes:648 (648.0 B)

    eth0      Link encap:Ethernet HWaddr 00:0c:29:07:13:bf
              inet addr:192.168.232.140 Bcast:192.168.232.255 Mask:255.255.255.0
              inet6 addr: fe80::20c:29ff:fe07:13bf/64 Scope:Link
              UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1
              RX packets:285451 errors:0 dropped:0 overruns:0 frame:0
              TX packets:320461 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:1000
              RX bytes:70009923 (70.0 MB) TX bytes:118909346 (118.9 MB)

    eth1      Link encap:Ethernet HWaddr 00:0c:29:07:13:c9
              inet addr:10.10.100.140 Bcast:10.10.100.255 Mask:255.255.255.0
              inet6 addr: fe80::20c:29ff:fe07:13c9/64 Scope:Link
              UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1
              RX packets:22244 errors:0 dropped:0 overruns:0 frame:0
              TX packets:181 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:1000
              RX bytes:2101857 (2.1 MB) TX bytes:23438 (23.4 KB)
              Interrupt:16 Base address:0x2000

    lo        Link encap:Local Loopback
              inet addr:127.0.0.1 Mask:255.0.0.0
              inet6 addr: ::1/128 Scope:Host
              UP LOOPBACK RUNNING MTU:65536 Metric:1
              RX packets:3 errors:0 dropped:0 overruns:0 frame:0
              TX packets:3 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:333 (333.0 B) TX bytes:333 (333.0 B)

    qbr8cbe7bb6-18 Link encap:Ethernet HWaddr 1a:8e:57:f2:7d:11
              inet6 addr: fe80::f858:8cff:fe4e:dfcb/64 Scope:Link
              UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1
              RX packets:16 errors:0 dropped:0 overruns:0 frame:0
              TX packets:8 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:1466 (1.4 KB) TX bytes:648 (648.0 B)

    qvb8cbe7bb6-18 Link encap:Ethernet HWaddr 1a:8e:57:f2:7d:11
              inet6 addr: fe80::188e:57ff:fef2:7d11/64 Scope:Link
              UP BROADCAST RUNNING PROMISC MULTICAST MTU:1500 Metric:1
              RX packets:106 errors:0 dropped:0 overruns:0 frame:0
              TX packets:159 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:1000
              RX bytes:14872 (14.8 KB) TX bytes:15102 (15.1 KB)

    qvo8cbe7bb6-18 Link encap:Ethernet HWaddr a6:98:52:ef:b1:0e
              inet6 addr: fe80::a498:52ff:feef:b10e/64 Scope:Link
              UP BROADCAST RUNNING PROMISC MULTICAST MTU:1500 Metric:1
              RX packets:159 errors:0 dropped:0 overruns:0 frame:0
              TX packets:106 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:1000
              RX bytes:15102 (15.1 KB) TX bytes:14872 (14.8 KB)

    tap8cbe7bb6-18 Link encap:Ethernet HWaddr fe:16:3e:32:28:af
              inet6 addr: fe80::fc16:3eff:fe32:28af/64 Scope:Link
              UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1
              RX packets:144 errors:0 dropped:0 overruns:0 frame:0
              TX packets:113 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:500
              RX bytes:13896 (13.8 KB) TX bytes:15422 (15.4 KB)

    virbr0    Link encap:Ethernet HWaddr 6a:cc:bc:f5:91:be
              inet addr:192.168.122.1 Bcast:192.168.122.255 Mask:255.255.255.0
              UP BROADCAST MULTICAST MTU:1500 Metric:1
              RX packets:0 errors:0 dropped:0 overruns:0 frame:0
              TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

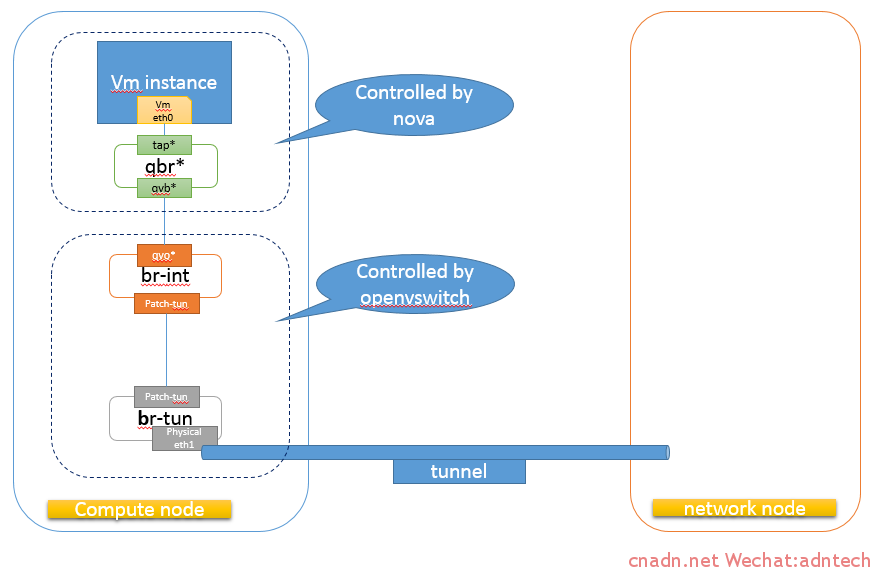

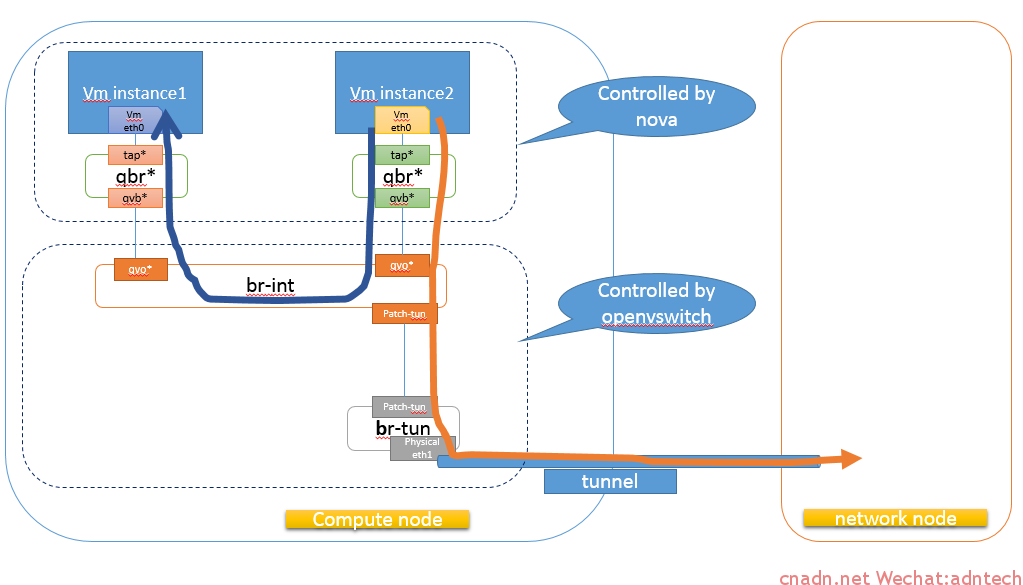

As you can see, several new network devices have appeared on the hypervisor: qbr***, qvb***, qvo*** and tap***. How do these devices connect a VM to the physical network (the provider network)?
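
Before tracing the devices, it helps to see where their names come from. In this output the names are consistent with a fixed prefix followed by the first 11 characters of the Neutron port UUID (8cbe7bb6-18d9-4455-abce-473ebe5a9591, the port that holds 10.10.10.7 in the `neutron port-list` output later in this article). The sketch below only reproduces that pattern; the function name `device_names` is hypothetical.

```python
# Port UUID of the instance's port, taken from the `neutron port-list` output.
PORT_ID = "8cbe7bb6-18d9-4455-abce-473ebe5a9591"

def device_names(port_id):
    """Build the four per-VM device names seen on the hypervisor (hypothetical helper)."""
    short = port_id[:11]  # "8cbe7bb6-18"
    return {prefix: prefix + short for prefix in ("qbr", "qvb", "qvo", "tap")}

names = device_names(PORT_ID)
for prefix, name in names.items():
    print(prefix, "->", name)
```

The same pattern explains the `tap78dd7f1e-53` and `qr-d8fd27a6-d1` ports on the network node, so a device on either node can be matched back to its Neutron port.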

First, look at what the new virtual bridge contains:

    root@compute:/home/mycisco# brctl show
    bridge name       bridge id            STP enabled    interfaces
    qbr8cbe7bb6-18    8000.1a8e57f27d11    no             qvb8cbe7bb6-18
                                                          tap8cbe7bb6-18
    virbr0            8000.000000000000    yes

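The qbr** virtual bridge contains two interfaces, qvb** and tap***. tap*** is the VM instance's NIC, and it shares a layer-2 segment with qvb***. So the next step is to find out where the qvb*** interface connects to:

    root@compute:/home/mycisco# ethtool -S qvb8cbe7bb6-18
    NIC statistics:
         peer_ifindex: 19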

This interface is paired with the interface whose index is 19. Let's see what interface 19 is:

    root@compute:/home/mycisco# ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
        link/ether 00:0c:29:07:13:bf brd ff:ff:ff:ff:ff:ff
        inet 192.168.232.140/24 brd 192.168.232.255 scope global eth0
           valid_lft forever preferred_lft forever
        inet6 fe80::20c:29ff:fe07:13bf/64 scope link
           valid_lft forever preferred_lft forever
    3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN group default qlen 1000
        link/ether 00:0c:29:07:13:c9 brd ff:ff:ff:ff:ff:ff
        inet 10.10.100.140/24 brd 10.10.100.255 scope global eth1
           valid_lft forever preferred_lft forever
        inet6 fe80::20c:29ff:fe07:13c9/64 scope link
           valid_lft forever preferred_lft forever
    4: ovs-system: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default
        link/ether e6:c2:4f:85:74:3b brd ff:ff:ff:ff:ff:ff
    5: br-int: <BROADCAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default
        link/ether d2:2d:3d:fc:72:44 brd ff:ff:ff:ff:ff:ff
        inet6 fe80::b406:33ff:fea3:293c/64 scope link
           valid_lft forever preferred_lft forever
    7: br-tun: <BROADCAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default
        link/ether 2a:fd:e3:83:b9:4f brd ff:ff:ff:ff:ff:ff
        inet6 fe80::a8f6:49ff:fe0d:5dd5/64 scope link
           valid_lft forever preferred_lft forever
    8: virbr0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
        link/ether 6a:cc:bc:f5:91:be brd ff:ff:ff:ff:ff:ff
        inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0
           valid_lft forever preferred_lft forever
    18: qbr8cbe7bb6-18: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
        link/ether 1a:8e:57:f2:7d:11 brd ff:ff:ff:ff:ff:ff
        inet6 fe80::f858:8cff:fe4e:dfcb/64 scope link
           valid_lft forever preferred_lft forever
    19: qvo8cbe7bb6-18: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master ovs-system state UP group default qlen 1000
        link/ether a6:98:52:ef:b1:0e brd ff:ff:ff:ff:ff:ff
        inet6 fe80::a498:52ff:feef:b10e/64 scope link
           valid_lft forever preferred_lft forever
    20: qvb8cbe7bb6-18: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master qbr8cbe7bb6-18 state UP group default qlen 1000
        link/ether 1a:8e:57:f2:7d:11 brd ff:ff:ff:ff:ff:ff
        inet6 fe80::188e:57ff:fef2:7d11/64 scope link
           valid_lft forever preferred_lft forever
    21: tap8cbe7bb6-18: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master qbr8cbe7bb6-18 state UNKNOWN group default qlen 500
        link/ether fe:16:3e:32:28:af brd ff:ff:ff:ff:ff:ff
        inet6 fe80::fc16:3eff:fe32:28af/64 scope link
           valid_lft forever preferred_lft forever

The output shows that index 19 is an interface named qvo8cbe7bb6-18. Where does that interface sit?

    root@compute:/home/mycisco# ovs-vsctl show
    b5553502-95e4-4ad2-90a6-a3da02d3819d
        Bridge br-tun
            Port br-tun
                Interface br-tun
                    type: internal
            Port patch-int
                Interface patch-int
                    type: patch
                    options: {peer=patch-tun}
            Port "gre-0a0a648b"
                Interface "gre-0a0a648b"
                    type: gre
                    options: {in_key=flow, local_ip="10.10.100.140", out_key=flow, remote_ip="10.10.100.139"}
        Bridge br-int
            fail_mode: secure
            Port patch-tun
                Interface patch-tun
                    type: patch
                    options: {peer=patch-int}
            Port "qvo8cbe7bb6-18"
                tag: 4
                Interface "qvo8cbe7bb6-18"
            Port br-int
                Interface br-int
                    type: internal
        ovs_version: "2.0.2"

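The qvo interface sits on the br-int bridge. br-int connects to br-tun through patch-tun, and br-tun uses the GRE tunnel interface gre-0a0a648b to reach the outside world over the underlying physical interface (10.10.100.140).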
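
A side observation on the GRE port names: in this lab the hex suffix of each port name decodes to the tunnel's remote IP. The sketch below checks that against the two `ovs-vsctl show` outputs; the helper name `gre_port_remote_ip` is made up for this example, and the naming pattern is inferred from the output rather than taken from documentation.

```python
import ipaddress

def gre_port_remote_ip(port_name):
    """Decode the hex suffix of a 'gre-xxxxxxxx' port name into a dotted IPv4 address."""
    hex_part = port_name.split("-", 1)[1]
    return str(ipaddress.IPv4Address(int(hex_part, 16)))

# Compute node port: remote end is the network node.
print(gre_port_remote_ip("gre-0a0a648b"))
# Network node port: remote end is the compute node.
print(gre_port_remote_ip("gre-0a0a648c"))
```

The decoded values agree with the `remote_ip` options shown for each port, which makes it easy to tell which peer a GRE port points at when a node has several tunnels.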

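So the VM communicates with the outside world over the following path:

In this setup, the DHCP servers of all namespaces run on the network node. So how exactly does a VM on the compute node reach its DHCP server over layer 2, and how does the network node know which network the VM's interface belongs to? In Horizon this is done by associating a network with the VM, but at the lower level, how are different networks told apart inside the tunnel?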

First, look at the OpenFlow rules on the network node:

    cookie=0x0, duration=128769.413s, table=2, n_packets=564, n_bytes=53473, idle_age=0, hard_age=65534, priority=1,tun_id=0x1 actions=mod_vlan_vid:9,resubmit(,10)
    cookie=0x0, duration=120432.221s, table=2, n_packets=19, n_bytes=1398, idle_age=65534, hard_age=65534, priority=1,tun_id=0x4 actions=mod_vlan_vid:11,resubmit(,10)
    cookie=0x0, duration=128779.008s, table=2, n_packets=197, n_bytes=18294, idle_age=65534, hard_age=65534, priority=1,tun_id=0x2 actions=mod_vlan_vid:8,resubmit(,10)
    cookie=0x0, duration=143526.387s, table=2, n_packets=33, n_bytes=2526, idle_age=65534, hard_age=65534, priority=0 actions=drop
    cookie=0x0, duration=143526.346s, table=3, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=0 actions=drop
    cookie=0x0, duration=143526.302s, table=10, n_packets=1495, n_bytes=142220, idle_age=0, hard_age=65534, priority=1 actions=learn(table=20,hard_timeout=300,priority=1,NXM_OF_VLAN_TCI[0..11],NXM_OF_ETH_DST[]=NXM_OF_ETH_SRC[],load:0->NXM_OF_VLAN_TCI[],load:NXM_NX_TUN_ID[]->NXM_NX_TUN_ID[],output:NXM_OF_IN_PORT[]),output:1
    cookie=0x0, duration=235.239s, table=20, n_packets=251, n_bytes=23702, hard_timeout=300, idle_age=0, hard_age=0, priority=1,vlan_tci=0x0009/0x0fff,dl_dst=fa:16:3e:32:28:af actions=load:0->NXM_OF_VLAN_TCI[],load:0x1->NXM_NX_TUN_ID[],output:2
    cookie=0x0, duration=143526.245s, table=20, n_packets=1, n_bytes=98, idle_age=65534, hard_age=65534, priority=0 actions=resubmit(,21)
    cookie=0x0, duration=120432.312s, table=21, n_packets=4, n_bytes=280, idle_age=65534, hard_age=65534, dl_vlan=11 actions=strip_vlan,set_tunnel:0x4,output:2

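The network node automatically assigns the network of each namespace its own tunnel ID (here 1, 2 and 4), so each network effectively gets its own logical tunnel.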
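
The tunnel-ID-to-VLAN mapping can be read straight out of the table=2 rules. The following sketch does that for an abbreviated copy of the network node flows (counters removed for readability); `tunnel_to_vlan` is a hypothetical helper name.

```python
import re

# Abbreviated table=2 rules from the network node (packet counters omitted).
FLOWS = """\
table=2, priority=1,tun_id=0x1 actions=mod_vlan_vid:9,resubmit(,10)
table=2, priority=1,tun_id=0x4 actions=mod_vlan_vid:11,resubmit(,10)
table=2, priority=1,tun_id=0x2 actions=mod_vlan_vid:8,resubmit(,10)
table=2, priority=0 actions=drop
"""

def tunnel_to_vlan(flows):
    """Return {tunnel_id: local_vlan_id} from tun_id -> mod_vlan_vid rules."""
    pattern = re.compile(r"tun_id=(0x[0-9a-f]+) actions=mod_vlan_vid:(\d+)")
    mapping = {}
    for line in flows.splitlines():
        m = pattern.search(line)
        if m:
            mapping[int(m.group(1), 16)] = int(m.group(2))
    return mapping

mapping = tunnel_to_vlan(FLOWS)
for tun_id in sorted(mapping):
    print(f"tunnel {tun_id} -> VLAN {mapping[tun_id]}")
```

The resulting VLANs 9, 8 and 11 are the same tags carried by the tap and qr ports on the network node's br-int.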

Now look at the OpenFlow rules on the compute node:

    root@compute:/home/mycisco# ovs-ofctl dump-flows br-tun
    NXST_FLOW reply (xid=0x4):
     cookie=0x0, duration=76160.403s, table=0, n_packets=289, n_bytes=26654, idle_age=0, hard_age=65534, priority=1,in_port=1 actions=resubmit(,1)
     cookie=0x0, duration=76159.597s, table=0, n_packets=186, n_bytes=22456, idle_age=0, hard_age=65534, priority=1,in_port=2 actions=resubmit(,2)
     cookie=0x0, duration=76160.361s, table=0, n_packets=6, n_bytes=468, idle_age=65534, hard_age=65534, priority=0 actions=drop
     cookie=0x0, duration=76160.294s, table=1, n_packets=221, n_bytes=20876, idle_age=0, hard_age=65534, priority=1,dl_dst=00:00:00:00:00:00/01:00:00:00:00:00 actions=resubmit(,20)
     cookie=0x0, duration=76160.236s, table=1, n_packets=68, n_bytes=5778, idle_age=80, hard_age=65534, priority=1,dl_dst=01:00:00:00:00:00/01:00:00:00:00:00 actions=resubmit(,21)
     cookie=0x0, duration=3669.216s, table=2, n_packets=186, n_bytes=22456, idle_age=0, priority=1,tun_id=0x1 actions=mod_vlan_vid:4,resubmit(,10)
     cookie=0x0, duration=76160.193s, table=2, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=0 actions=drop
     cookie=0x0, duration=76160.156s, table=3, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=0 actions=drop
     cookie=0x0, duration=76160.11s, table=10, n_packets=186, n_bytes=22456, idle_age=0, hard_age=65534, priority=1 actions=learn(table=20,hard_timeout=300,priority=1,NXM_OF_VLAN_TCI[0..11],NXM_OF_ETH_DST[]=NXM_OF_ETH_SRC[],load:0->NXM_OF_VLAN_TCI[],load:NXM_NX_TUN_ID[]->NXM_NX_TUN_ID[],output:NXM_OF_IN_PORT[]),output:1
     cookie=0x0, duration=80.351s, table=20, n_packets=87, n_bytes=8190, hard_timeout=300, idle_age=0, hard_age=0, priority=1,vlan_tci=0x0004/0x0fff,dl_dst=fa:16:3e:2a:46:e0 actions=load:0->NXM_OF_VLAN_TCI[],load:0x1->NXM_NX_TUN_ID[],output:2
     cookie=0x0, duration=76160.068s, table=20, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=0 actions=resubmit(,21)
     cookie=0x0, duration=3669.335s, table=21, n_packets=20, n_bytes=1962, idle_age=80, dl_vlan=4 actions=strip_vlan,set_tunnel:0x1,output:2
     cookie=0x0, duration=76160.019s, table=21, n_packets=32, n_bytes=2616, idle_age=3669, hard_age=65534, priority=0 actions=drop

As you can see, br-tun on the compute node associates a tunnel ID with a VLAN ID. When it receives a packet tagged with VLAN 4 from br-int, it strips the VLAN ID and sends the packet into the corresponding GRE tunnel. When the network node receives the packet from the tunnel, it looks up the right VLAN ID in its own tunnel-ID-to-VLAN-ID mapping, tags the packet with it and hands the packet to its br-int. In this example, the VM's NIC on the compute node side is in VLAN 4:

    root@compute:/home/mycisco# ovs-vsctl show
    b5553502-95e4-4ad2-90a6-a3da02d3819d
        Bridge br-tun
            Port br-tun
                Interface br-tun
                    type: internal
            Port patch-int
                Interface patch-int
                    type: patch
                    options: {peer=patch-tun}
            Port "gre-0a0a648b"
                Interface "gre-0a0a648b"
                    type: gre
                    options: {in_key=flow, local_ip="10.10.100.140", out_key=flow, remote_ip="10.10.100.139"}
        Bridge br-int
            fail_mode: secure
            Port patch-tun
                Interface patch-tun
                    type: patch
                    options: {peer=patch-int}
            Port "qvo8cbe7bb6-18"
                tag: 4
                Interface "qvo8cbe7bb6-18"
            Port br-int
                Interface br-int
                    type: internal
        ovs_version: "2.0.2"

On the network node, the same network is on VLAN ID 9. So it is as if a GRE tunnel directly interconnected two different VLANs: although the VLAN IDs differ on each side, what travels between them is ordinary untagged layer-2 traffic:

    root@network:/home/mycisco# ovs-vsctl show
    909c85d0-ff4e-446b-bf8a-9166f0fccd24
        Bridge br-int
            fail_mode: secure
            Port patch-tun
                Interface patch-tun
                    type: patch
                    options: {peer=patch-int}
            Port "tap78dd7f1e-53"
                tag: 9
                Interface "tap78dd7f1e-53"
                    type: internal
            Port "tap708ee330-0c"
                tag: 11
                Interface "tap708ee330-0c"
                    type: internal
            Port "taped034aae-11"
                tag: 8
                Interface "taped034aae-11"
                    type: internal
            Port "qr-d8fd27a6-d1"
                tag: 9
                Interface "qr-d8fd27a6-d1"
                    type: internal
            Port "qr-09208a89-0b"
                tag: 8
                Interface "qr-09208a89-0b"
                    type: internal
            Port "qr-4debe62b-e4"
                tag: 11
                Interface "qr-4debe62b-e4"
                    type: internal
            Port br-int
                Interface br-int
                    type: internal
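
The VLAN 4 to VLAN 9 translation can be sketched as two table lookups chained through the tunnel ID. The dictionaries below are transcribed from the flow dumps in this article; the function name `vlan_on_network_node` is hypothetical and the model ignores everything except the tag rewriting.

```python
# Compute node, table=21: dl_vlan=4 -> strip_vlan, set_tunnel:0x1
COMPUTE_VLAN_TO_TUN = {4: 1}

# Network node, table=2: tun_id -> mod_vlan_vid
NETWORK_TUN_TO_VLAN = {1: 9, 2: 8, 4: 11}

def vlan_on_network_node(compute_vlan):
    """Follow a frame from a compute-node VLAN to the VLAN it gets on the network node."""
    tun_id = COMPUTE_VLAN_TO_TUN[compute_vlan]  # tag stripped, tunnel ID set
    network_vlan = NETWORK_TUN_TO_VLAN[tun_id]  # tunnel ID mapped back to a local tag
    return tun_id, network_vlan

tun_id, network_vlan = vlan_on_network_node(4)
print(f"compute VLAN 4 -> tunnel {tun_id} -> network node VLAN {network_vlan}")
```

The point of the sketch is that VLAN IDs are only locally significant on each node; the tunnel ID is the one value both sides agree on.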

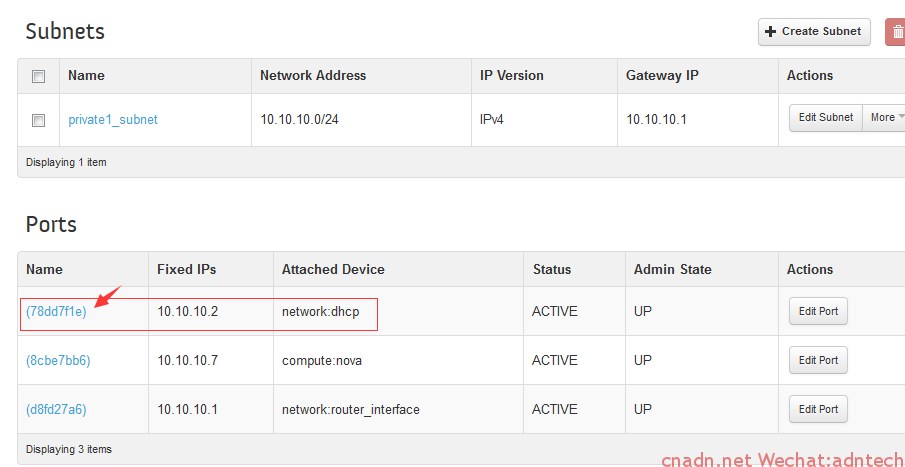

The tap devices above correspond to the DHCP ports in the figure below.

Watch the OpenFlow rules on the compute node: after pinging the gateway from the instance, the following entry appears:

    cookie=0x0, duration=151.529s, table=20, n_packets=18, n_bytes=1540, hard_timeout=300, idle_age=30, hard_age=29, priority=1,vlan_tci=0x0004/0x0fff,dl_dst=fa:16:3e:2a:46:e0 actions=load:0->NXM_OF_VLAN_TCI[],load:0x1->NXM_NX_TUN_ID[],output:2

The destination MAC in this entry is the MAC of the gateway on the remote side:

If you now start another instance on the same network on the same compute node and ping the same gateway, no additional OpenFlow entry appears, because the destination MAC is the same.

If you instead ping a different IP (the DHCP server) from the same instance, you will see one more entry:

    cookie=0x0, duration=151.529s, table=20, n_packets=18, n_bytes=1540, hard_timeout=300, idle_age=30, hard_age=29, priority=1,vlan_tci=0x0004/0x0fff,dl_dst=fa:16:3e:2a:46:e0 actions=load:0->NXM_OF_VLAN_TCI[],load:0x1->NXM_NX_TUN_ID[],output:2
    cookie=0x0, duration=2.623s, table=20, n_packets=3, n_bytes=294, hard_timeout=300, idle_age=0, hard_age=0, priority=1,vlan_tci=0x0004/0x0fff,dl_dst=fa:16:3e:3c:54:50 actions=load:0->NXM_OF_VLAN_TCI[],load:0x1->NXM_NX_TUN_ID[],output:2

The destination MAC of the new entry belongs to the DHCP server in this network (the MAC for IP 10.10.10.2).
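
The behaviour of these table=20 entries can be modelled as a small learning table keyed by VLAN and MAC address. This is a simplified sketch of what the `learn(...)` action in table=10 does, under the assumption that only the key and the resulting action matter; the real entries also expire after `hard_timeout=300`, which is left out here. The names `learned` and `learn` are made up for the illustration.

```python
# (vlan, mac) -> action, mimicking the entries that table=10 installs into table=20.
learned = {}

def learn(vlan, src_mac, tun_id, in_port):
    """Remember that src_mac on this VLAN is reachable via tun_id on in_port."""
    learned[(vlan, src_mac)] = {"tun_id": tun_id, "output": in_port}

GATEWAY_MAC = "fa:16:3e:2a:46:e0"  # 10.10.10.1
DHCP_MAC = "fa:16:3e:3c:54:50"     # 10.10.10.2

# Replies from the gateway arrive over the tunnel, no matter which instance pinged it.
learn(4, GATEWAY_MAC, 0x1, 2)
learn(4, GATEWAY_MAC, 0x1, 2)
print(len(learned))  # still one entry: same VLAN, same MAC

# A reply from the DHCP server has a different source MAC, so a second entry appears.
learn(4, DHCP_MAC, 0x1, 2)
print(len(learned))
```

Because the key is the remote MAC rather than the local instance, the number of learned entries tracks how many distinct remote hosts are being talked to, not how many local VMs are talking.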

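If two VMs are deployed in the same network of the same tenant, the structure looks as follows; the hypervisor on the compute node creates a separate qbr** bridge for each VM instance:

In the figure above, the two instances are in the same network, so their two interfaces on br-int belong to the same VLAN, and traffic within the VLAN is switched directly by br-int:

        Bridge br-int
            fail_mode: secure
            Port patch-tun
                Interface patch-tun
                    type: patch
                    options: {peer=patch-int}
            Port "qvo8cbe7bb6-18"
                tag: 4
                Interface "qvo8cbe7bb6-18"
            Port br-int
                Interface br-int
                    type: internal
            Port "qvodd2c3906-f8"
                tag: 4
                Interface "qvodd2c3906-f8"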

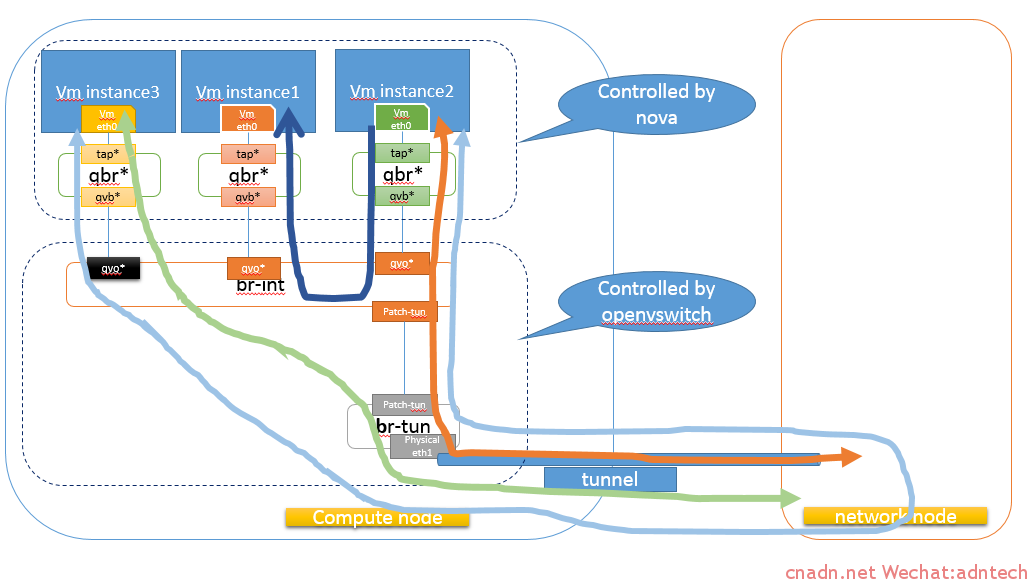

If a VM instance on a different network is then added to the same compute node, traffic between them flows as follows:

instance1 and instance3 are in different networks, so traffic between them has to be routed by the network node. The OpenFlow rules on the network node show this:

    cookie=0x0, duration=5.791s, table=20, n_packets=8, n_bytes=672, hard_timeout=300, idle_age=0, hard_age=0, priority=1,vlan_tci=0x0008/0x0fff,dl_dst=fa:16:3e:cb:91:43 actions=load:0->NXM_OF_VLAN_TCI[],load:0x2->NXM_NX_TUN_ID[],output:2
    cookie=0x0, duration=5.785s, table=20, n_packets=6, n_bytes=532, hard_timeout=300, idle_age=0, hard_age=0, priority=1,vlan_tci=0x0009/0x0fff,dl_dst=fa:16:3e:53:9b:3b actions=load:0->NXM_OF_VLAN_TCI[],load:0x1->NXM_NX_TUN_ID[],output:2

At the same time, the OpenFlow rules on the compute node are:

    cookie=0x0, duration=9.893s, table=20, n_packets=11, n_bytes=1022, hard_timeout=300, idle_age=0, hard_age=0, priority=1,vlan_tci=0x0004/0x0fff,dl_dst=fa:16:3e:2a:46:e0 actions=load:0->NXM_OF_VLAN_TCI[],load:0x1->NXM_NX_TUN_ID[],output:2
    cookie=0x0, duration=9.895s, table=20, n_packets=11, n_bytes=1022, hard_timeout=300, idle_age=0, hard_age=0, priority=1,vlan_tci=0x0005/0x0fff,dl_dst=fa:16:3e:79:45:64 actions=load:0->NXM_OF_VLAN_TCI[],load:0x2->NXM_NX_TUN_ID[],output:2
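
Whether two instances can be switched locally on br-int or have to go through the router on the network node comes down to whether they share a subnet. The sketch below makes that check explicit. It assumes a /24 prefix for every tenant subnet, which the outputs in this article do not show, and the function name `needs_router` is hypothetical.

```python
import ipaddress

PREFIX_LEN = 24  # assumption: the subnet masks are not shown in the article

def needs_router(ip_a, ip_b, prefix_len=PREFIX_LEN):
    """Return True when the two addresses are in different subnets."""
    net_a = ipaddress.ip_interface(f"{ip_a}/{prefix_len}").network
    net_b = ipaddress.ip_interface(f"{ip_b}/{prefix_len}").network
    return net_a != net_b

# Two instances on the same network: switched by br-int on the compute node.
print(needs_router("10.10.10.7", "10.10.10.8"))
# Instances on different networks: each sends to its own gateway over the tunnel.
print(needs_router("10.10.10.7", "10.10.20.7"))
```

This matches the compute node flows above, where the two learned destinations are on different VLANs (4 and 5) and use different tunnel IDs (0x1 and 0x2).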

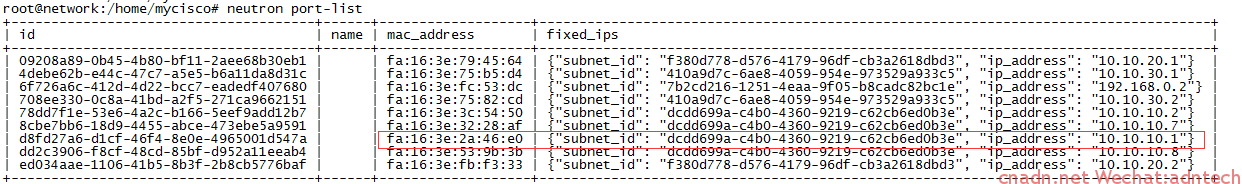

The MAC addresses above can be matched against the IP addresses in the table below:

    root@network:/home/mycisco# neutron port-list
    +--------------------------------------+------+-------------------+------------------------------------------------------------------------------------+
    | id                                   | name | mac_address       | fixed_ips                                                                          |
    +--------------------------------------+------+-------------------+------------------------------------------------------------------------------------+
    | 09208a89-0b45-4b80-bf11-2aee68b30eb1 |      | fa:16:3e:79:45:64 | {"subnet_id": "f380d778-d576-4179-96df-cb3a2618dbd3", "ip_address": "10.10.20.1"}  |
    | 4debe62b-e44c-47c7-a5e5-b6a11da8d31c |      | fa:16:3e:75:b5:d4 | {"subnet_id": "410a9d7c-6ae8-4059-954e-973529a933c5", "ip_address": "10.10.30.1"}  |
    | 6f726a6c-412d-4d22-bcc7-eadedf407680 |      | fa:16:3e:fc:53:dc | {"subnet_id": "7b2cd216-1251-4eaa-9f05-b8cadc82bc1e", "ip_address": "192.168.0.2"} |
    | 708ee330-0c8a-41bd-a2f5-271ca9662151 |      | fa:16:3e:75:82:cd | {"subnet_id": "410a9d7c-6ae8-4059-954e-973529a933c5", "ip_address": "10.10.30.2"}  |
    | 78dd7f1e-53e6-4a2c-b166-5eef9add12b7 |      | fa:16:3e:3c:54:50 | {"subnet_id": "dcdd699a-c4b0-4360-9219-c62cb6ed0b3e", "ip_address": "10.10.10.2"}  |
    | 8cbe7bb6-18d9-4455-abce-473ebe5a9591 |      | fa:16:3e:32:28:af | {"subnet_id": "dcdd699a-c4b0-4360-9219-c62cb6ed0b3e", "ip_address": "10.10.10.7"}  |
    | a6f975bb-605b-4f36-ad3f-669ccdab5fe5 |      | fa:16:3e:cb:91:43 | {"subnet_id": "f380d778-d576-4179-96df-cb3a2618dbd3", "ip_address": "10.10.20.7"}  |
    | d8fd27a6-d1cf-46f4-8e0e-4965001d547a |      | fa:16:3e:2a:46:e0 | {"subnet_id": "dcdd699a-c4b0-4360-9219-c62cb6ed0b3e", "ip_address": "10.10.10.1"}  |
    | dd2c3906-f8cf-48cd-85bf-d952a11eeab4 |      | fa:16:3e:53:9b:3b | {"subnet_id": "dcdd699a-c4b0-4360-9219-c62cb6ed0b3e", "ip_address": "10.10.10.8"}  |
    | ed034aae-1106-41b5-8b3f-2b8cb5776baf |      | fa:16:3e:fb:f3:33 | {"subnet_id": "f380d778-d576-4179-96df-cb3a2618dbd3", "ip_address": "10.10.20.2"}  |
    +--------------------------------------+------+-------------------+------------------------------------------------------------------------------------+
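
To save the manual cross-referencing, here is a sketch that resolves the `dl_dst` of each learned flow to an IP using the port list. Only the MACs that occur in the flows are included, the flow strings are abbreviated, and `resolve` is a hypothetical helper name.

```python
import re

# MAC -> IP pairs copied from the `neutron port-list` output.
MAC_TO_IP = {
    "fa:16:3e:79:45:64": "10.10.20.1",
    "fa:16:3e:2a:46:e0": "10.10.10.1",
    "fa:16:3e:3c:54:50": "10.10.10.2",
    "fa:16:3e:cb:91:43": "10.10.20.7",
    "fa:16:3e:53:9b:3b": "10.10.10.8",
}

# Match fields of the learned table=20 flows shown earlier (actions omitted).
FLOWS = [
    "vlan_tci=0x0008/0x0fff,dl_dst=fa:16:3e:cb:91:43",  # network node
    "vlan_tci=0x0009/0x0fff,dl_dst=fa:16:3e:53:9b:3b",  # network node
    "vlan_tci=0x0004/0x0fff,dl_dst=fa:16:3e:2a:46:e0",  # compute node
    "vlan_tci=0x0005/0x0fff,dl_dst=fa:16:3e:79:45:64",  # compute node
]

def resolve(flow):
    """Return (vlan_id, destination IP) for one flow match string."""
    tci = int(re.search(r"vlan_tci=0x([0-9a-f]{4})", flow).group(1), 16)
    mac = re.search(r"dl_dst=([0-9a-f:]{17})", flow).group(1)
    return tci & 0x0FFF, MAC_TO_IP.get(mac, "unknown")

resolved = [resolve(f) for f in FLOWS]
for vlan, ip in resolved:
    print(f"VLAN {vlan}: destination {ip}")
```

Read this way, the compute node entries point at the two gateways (10.10.10.1 and 10.10.20.1), while the network node entries point at the instances themselves, which is what you would expect when inter-network traffic is routed on the network node.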

Finally, ping the VM from the external network interface of the virtual router on the network node:

    root@network:/home/mycisco# ip netns exec qrouter-040c1455-6096-4806-ba91-fec64cdaed81 ping -I 192.168.0.2 10.10.10.7
    PING 10.10.10.7 (10.10.10.7) from 192.168.0.2 : 56(84) bytes of data.
    64 bytes from 10.10.10.7: icmp_seq=1 ttl=64 time=5.22 ms
    64 bytes from 10.10.10.7: icmp_seq=2 ttl=64 time=6.31 ms
    64 bytes from 10.10.10.7: icmp_seq=3 ttl=64 time=1.32 ms
    64 bytes from 10.10.10.7: icmp_seq=4 ttl=64 time=1.33 ms
    64 bytes from 10.10.10.7: icmp_seq=5 ttl=64 time=1.05 ms

![image](http://www.cnadn.net/upload/2014/12/GXRA1SRCH8JBD6BMR.jpg)

![image](http://www.cnadn.net/upload/2014/12/FL151LAH6N@TAT_01.jpg)
