[title]Introduction to CC[/title]

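The previous posts installed and deployed a k8s cluster, and it appears to be working normally. The next step is to install the container connector published by F5, also known as F5 CC, inside k8s. CC is an open-source F5 product. It runs a k8s-bigip-ctlr pod instance inside the k8s cluster and, driven by a ConfigMap you define, configures k8s services or pods onto an F5 BIGIP. This publishes the cluster's north-south traffic externally and lets the published applications use BIGIP's rich feature set, such as LB, SSL, WAF, firewall, and anti-DDoS.

CC watches the relevant API endpoints for information and, based on ConfigMap definitions labeled f5type: virtual-server, automatically configures the F5 BIGIP through BIGIP's RESTful interface. Changes to services inside k8s, such as a service being added, modified, or deleted, or scaled out or in, are automatically reflected in the BIGIP configuration.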
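The label-based selection described above can be illustrated with a small sketch. This is not CC's actual code: `select_f5_configmaps` is a hypothetical helper, and the ConfigMaps are modeled as plain dictionaries shaped like the objects the Kubernetes API returns.

```python
from typing import Any, Dict, List

# Label key and value taken from the text above.
F5_LABEL_KEY = "f5type"
F5_LABEL_VALUE = "virtual-server"


def select_f5_configmaps(configmaps: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Return only the ConfigMaps labeled f5type=virtual-server."""
    selected = []
    for cm in configmaps:
        # metadata.labels may be missing or null on a real object.
        labels = cm.get("metadata", {}).get("labels") or {}
        if labels.get(F5_LABEL_KEY) == F5_LABEL_VALUE:
            selected.append(cm)
    return selected


if __name__ == "__main__":
    sample = [
        {"metadata": {"name": "k8s.vs", "labels": {"f5type": "virtual-server"}}},
        {"metadata": {"name": "app-settings", "labels": {"tier": "backend"}}},
        {"metadata": {"name": "no-labels"}},
    ]
    for cm in select_f5_configmaps(sample):
        print(cm["metadata"]["name"])
```

Only ConfigMaps carrying the label are candidates for BIGIP configuration; every other ConfigMap in the namespace is ignored.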

For details on the CC product, see the official documentation: http://clouddocs.f5.com/products/connectors/k8s-bigip-ctlr/latest/

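[title]Installing CC on an existing K8S 1.6.7 environment[/title]

- On the master node, create a secret for the CC container to use when it connects to the BIGIP device. Replace the username and password with those of the actual device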

```shell
kubectl create secret generic bigip-login --namespace kube-system --from-literal=username=admin --from-literal=password=admin
```

- Deploy CC using the official yaml file

```yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: k8s-bigip-ctlr-deployment
  namespace: kube-system
spec:
  replicas: 1
  template:
    metadata:
      name: k8s-bigip-ctlr
      labels:
        app: k8s-bigip-ctlr
    spec:
      containers:
        - name: k8s-bigip-ctlr
          # replace the version as appropriate
          # (e.g., f5networks/k8s-bigip-ctlr:1.1.0-beta.1)
          image: "f5networks/k8s-bigip-ctlr:1.0.0"
          env:
            - name: BIGIP_USERNAME
              valueFrom:
                secretKeyRef:
                  name: bigip-login
                  key: username
            - name: BIGIP_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: bigip-login
                  key: password
          command: ["/app/bin/k8s-bigip-ctlr"]
          args: [
            "--bigip-username=$(BIGIP_USERNAME)",
            "--bigip-password=$(BIGIP_PASSWORD)",
            "--bigip-url=10.190.24.171",
            "--bigip-partition=kubernetes",
            # To manage a single namespace, enter it below
            # (required in v1.0)
            # To manage all namespaces, omit the `namespace` entry
            # (default as of v1.1.0-beta.1 )
            # To manage multiple namespaces, enter a separate flag for each
            # below
            # (as of v1.1.0-beta.1 )
            "--namespace=default",
            ]
```
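In the manifest above, the args refer to the credentials as $(BIGIP_USERNAME) and $(BIGIP_PASSWORD). Kubernetes substitutes such references from the container's env entries before starting the process. The following is a simplified sketch of that substitution rule, not the kubelet's actual code. `expand_args` is a hypothetical helper, and it assumes variable names made up of letters, digits, `_`, `.` and `-`.

```python
import re
from typing import Dict, List

# Matches either the escape sequence "$$" or a reference of the form "$(NAME)".
_REF = re.compile(r"\$\$|\$\(([A-Za-z_][A-Za-z0-9_.-]*)\)")


def expand_args(args: List[str], env: Dict[str, str]) -> List[str]:
    """Expand $(VAR) references in container args from the given env mapping."""

    def substitute(match: "re.Match[str]") -> str:
        name = match.group(1)
        if name is None:
            # "$$" is an escaped dollar sign, so "$$(VAR)" stays literal "$(VAR)".
            return "$"
        # References to undefined variables are left unchanged.
        return env.get(name, match.group(0))

    return [_REF.sub(substitute, arg) for arg in args]


if __name__ == "__main__":
    env = {"BIGIP_USERNAME": "admin", "BIGIP_PASSWORD": "admin"}
    args = [
        "--bigip-username=$(BIGIP_USERNAME)",
        "--bigip-password=$(BIGIP_PASSWORD)",
        "--bigip-partition=kubernetes",
    ]
    for arg in expand_args(args, env):
        print(arg)
```

This is why the credentials can stay in the bigip-login secret while the deployment file itself contains no password.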

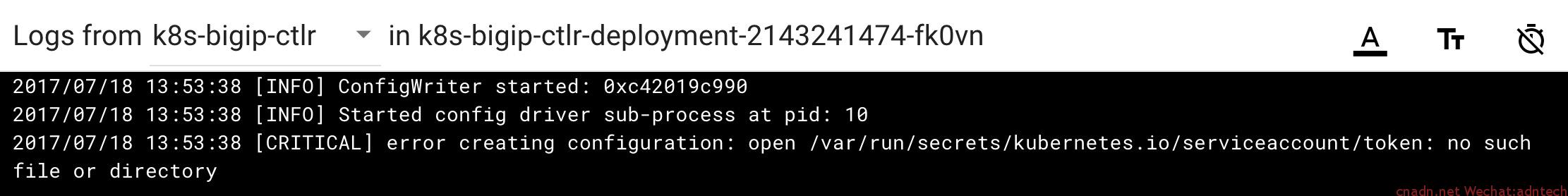

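If all goes well, you will find that this pod has failed :). You can check the pod's log on the dashboard, or in the local file the pod mounts (run docker inspect <container name> and look at the value of io.kubernetes.container.logpath, which looks like /var/log/pods/b47ba7e6-6ba0-11e7-bdc9-000c29420d98/k8s-bigip-ctlr_0.log), or with the kubectl logs command. CC reports that the following file or directory cannot be found: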

/var/run/secrets/kubernetes.io/serviceaccount/token

```go
// Possible returns true if loading an inside-kubernetes-cluster is possible.
func (config *inClusterClientConfig) Possible() bool {
	fi, err := os.Stat("/var/run/secrets/kubernetes.io/serviceaccount/token")
	return os.Getenv("KUBERNETES_SERVICE_HOST") != "" &&
		os.Getenv("KUBERNETES_SERVICE_PORT") != "" &&
		err == nil && !fi.IsDir()
}
```
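The same three conditions can be checked by hand when debugging a failing pod. Below is a minimal Python sketch of the check performed by the Go function above. `in_cluster_config_possible` is a hypothetical name, and the environment and token path are passed in as parameters only so that the check can be tried outside a cluster.

```python
import os
from typing import Mapping, Optional

TOKEN_PATH = "/var/run/secrets/kubernetes.io/serviceaccount/token"


def in_cluster_config_possible(
    env: Optional[Mapping[str, str]] = None,
    token_path: str = TOKEN_PATH,
) -> bool:
    """Mirror the Go check: both service env vars are set and the token is a non-directory."""
    env = os.environ if env is None else env
    return (
        env.get("KUBERNETES_SERVICE_HOST", "") != ""
        and env.get("KUBERNETES_SERVICE_PORT", "") != ""
        and os.path.exists(token_path)
        and not os.path.isdir(token_path)
    )


if __name__ == "__main__":
    # Inside a pod with a mounted service account token this prints True.
    print(in_cluster_config_possible())
```

When the service account token is not mounted into the pod, the last two conditions fail even though the environment variables are present.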

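The token file is missing because the API server was configured earlier with --admission-control=AlwaysAdmit. As a pod, F5 CC calls the API's in-cluster service over https, which requires the ServiceAccount plugin, so ServiceAccount must be explicitly enabled in admission control. Change the flag to: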

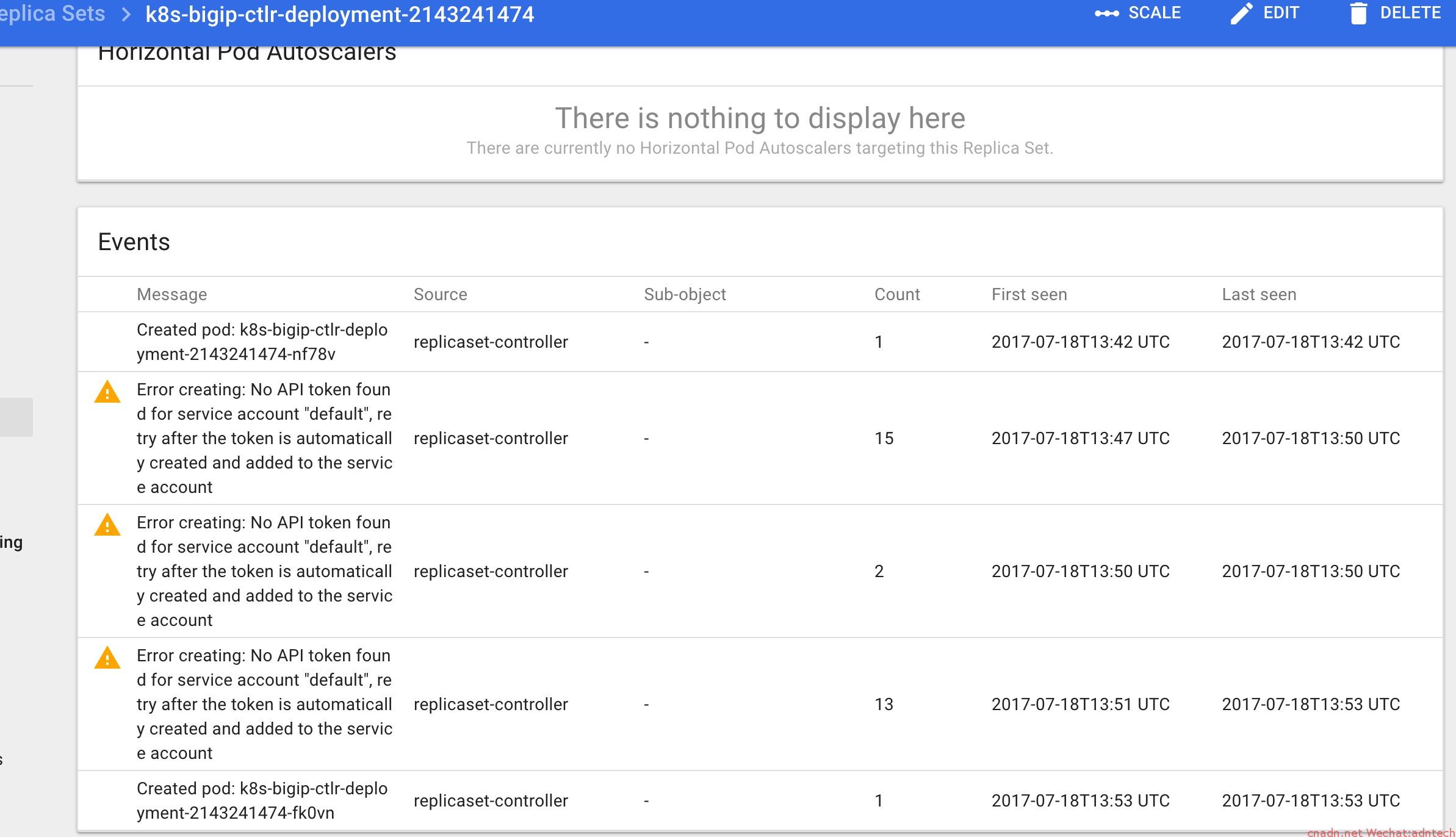

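--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota

- After changing the admission control setting, restart the API server and redeploy CC. The deployment now succeeds, but the replica set it creates reports the following error and cannot create pods

This is because the default SA in the system does not yet have a corresponding secret (none can be generated, because the cluster has no SSL certificates configured so far). For the system to generate secrets for these service accounts, SSL certificates must be configured, which requires a CA to sign them. You can create your own CA and issue the certificates for the apiserver (in fact, when no certificate is specified, the apiserver generates one itself: /var/run/kubernetes/apiserver.crt and apiserver.key). Here the certificates are created manually; the detailed steps are covered separately.

- After creating all the certificates, updating the startup configuration of every component on the master, and restarting the services, you can see that the system has automatically generated many default secrets (the controller manager must be restarted with the certificate-related flags configured before these secrets appear; each of these secrets contains ca.crt)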

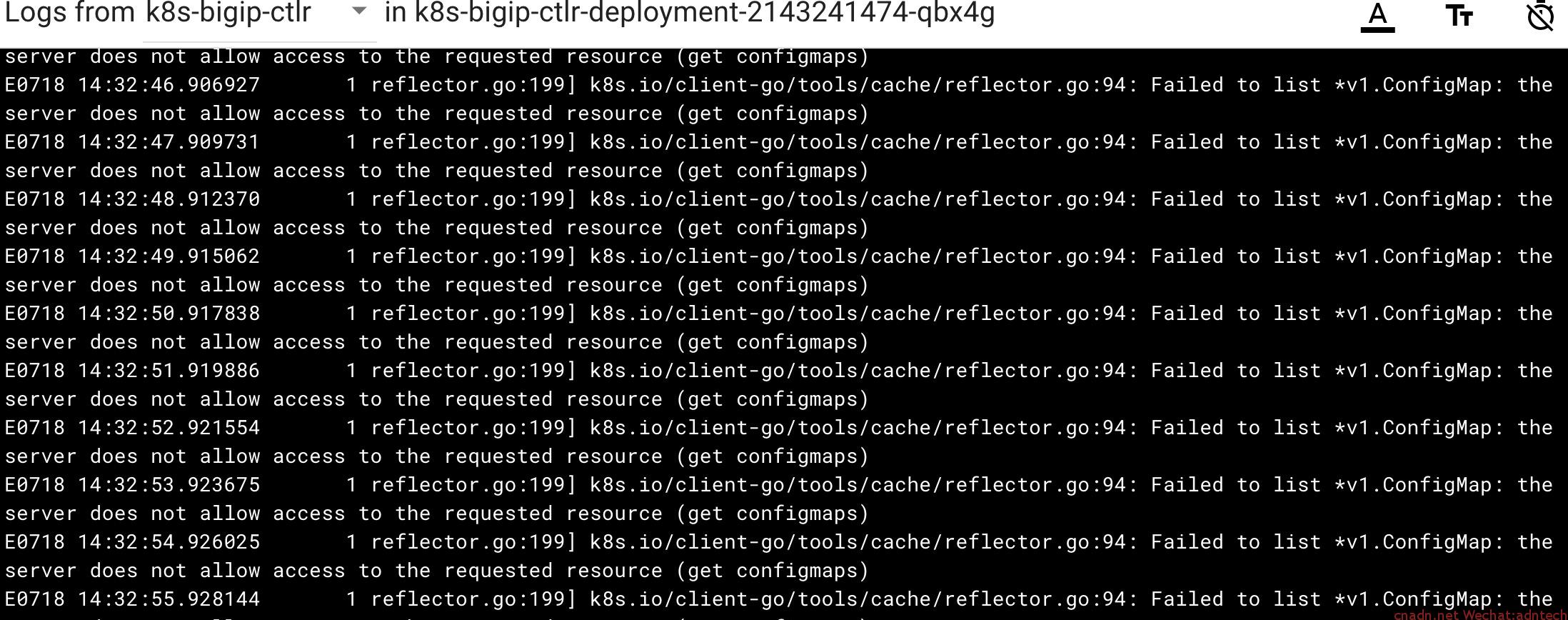

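- The CC pod now starts normally and no longer shows the error from step 3 above. The CC container still does not work properly, however; its log keeps reporting the following:

This is because under the RBAC authorization mode in 1.6.7, the default SA has no permissions.

- So specify a ServiceAccount when creating the pod, and bind that SA to a clusterrole to grant it permissions

A: Create the SA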

```
[root@docker1 f5]# cat f5-service-account.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: f5bigip
  namespace: kube-system
```

After running kubectl create -f f5-service-account.yaml, the system automatically creates a secret for this SA:

```
[root@docker1 f5]# kubectl get secrets --all-namespaces
NAMESPACE     NAME                               TYPE                                  DATA   AGE
default       default-token-x29k7                kubernetes.io/service-account-token   3      19m
kube-public   default-token-sbmhp                kubernetes.io/service-account-token   3      19m
kube-system   bigip-login                        Opaque                                2      1d
kube-system   bigip-login106                     Opaque                                2      22h
kube-system   default-token-s6zsf                kubernetes.io/service-account-token   3      19m
kube-system   f5bigip-token-lh49k                kubernetes.io/service-account-token   3      57s
kube-system   kube-dns-token-472dk               kubernetes.io/service-account-token   3      1d
kube-system   kubernetes-dashboard-token-xcq5w   kubernetes.io/service-account-token   3      1d
```

B: Create the role binding to authorize the f5bigip SA

```
[root@docker1 f5]# cat f5-sa-cluster-role-binding.yaml
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: f5bigip
  namespace: kube-system
subjects:
  - kind: ServiceAccount
    namespace: kube-system
    name: f5bigip
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io
```

kubectl create -f f5-sa-cluster-role-binding.yaml
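If several service accounts need the same kind of binding, the manifest can also be generated rather than written by hand. The sketch below builds the same object as a Python dictionary and prints it as JSON, which kubectl create -f also accepts. `cluster_role_binding` is a hypothetical helper. It leaves out metadata.namespace because a ClusterRoleBinding is cluster-scoped.

```python
import json
from typing import Any, Dict


def cluster_role_binding(
    name: str,
    sa_name: str,
    sa_namespace: str,
    role: str = "cluster-admin",
) -> Dict[str, Any]:
    """Build a ClusterRoleBinding that grants a ClusterRole to one ServiceAccount."""
    return {
        "kind": "ClusterRoleBinding",
        "apiVersion": "rbac.authorization.k8s.io/v1beta1",
        "metadata": {"name": name},
        "subjects": [
            {"kind": "ServiceAccount", "namespace": sa_namespace, "name": sa_name}
        ],
        "roleRef": {
            "kind": "ClusterRole",
            "name": role,
            "apiGroup": "rbac.authorization.k8s.io",
        },
    }


if __name__ == "__main__":
    binding = cluster_role_binding("f5bigip", "f5bigip", "kube-system")
    print(json.dumps(binding, indent=2))
```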

After running it, check the bindings:

```
[root@docker1 scripts]# kubectl get clusterrolebindings -o wide
NAME                                          AGE   ROLE                                          USERS                           GROUPS                                        SERVICEACCOUNTS
cluster-admin                                 1d    cluster-admin                                                                 system:masters
f5bigip                                       23h   cluster-admin                                                                                                               kube-system/f5bigip
kubernetes-dashboard                          1d    cluster-admin                                                                                                               kube-system/kubernetes-dashboard
system:basic-user                             1d    system:basic-user                                                             system:authenticated, system:unauthenticated
system:controller:attachdetach-controller     1d    system:controller:attachdetach-controller                                                                                   kube-system/attachdetach-controller
system:controller:certificate-controller      1d    system:controller:certificate-controller                                                                                    kube-system/certificate-controller
system:controller:cronjob-controller          1d    system:controller:cronjob-controller                                                                                        kube-system/cronjob-controller
system:controller:daemon-set-controller       1d    system:controller:daemon-set-controller                                                                                     kube-system/daemon-set-controller
system:controller:deployment-controller       1d    system:controller:deployment-controller                                                                                     kube-system/deployment-controller
system:controller:disruption-controller       1d    system:controller:disruption-controller                                                                                     kube-system/disruption-controller
system:controller:endpoint-controller         1d    system:controller:endpoint-controller                                                                                       kube-system/endpoint-controller
system:controller:generic-garbage-collector   1d    system:controller:generic-garbage-collector                                                                                 kube-system/generic-garbage-collector
system:controller:horizontal-pod-autoscaler   1d    system:controller:horizontal-pod-autoscaler                                                                                 kube-system/horizontal-pod-autoscaler
system:controller:job-controller              1d    system:controller:job-controller                                                                                            kube-system/job-controller
system:controller:namespace-controller        1d    system:controller:namespace-controller                                                                                      kube-system/namespace-controller
system:controller:node-controller             1d    system:controller:node-controller                                                                                           kube-system/node-controller
system:controller:persistent-volume-binder    1d    system:controller:persistent-volume-binder                                                                                  kube-system/persistent-volume-binder
system:controller:pod-garbage-collector       1d    system:controller:pod-garbage-collector                                                                                     kube-system/pod-garbage-collector
system:controller:replicaset-controller       1d    system:controller:replicaset-controller                                                                                     kube-system/replicaset-controller
system:controller:replication-controller      1d    system:controller:replication-controller                                                                                    kube-system/replication-controller
system:controller:resourcequota-controller    1d    system:controller:resourcequota-controller                                                                                  kube-system/resourcequota-controller
system:controller:route-controller            1d    system:controller:route-controller                                                                                          kube-system/route-controller
system:controller:service-account-controller  1d    system:controller:service-account-controller                                                                                kube-system/service-account-controller
system:controller:service-controller          1d    system:controller:service-controller                                                                                        kube-system/service-controller
system:controller:statefulset-controller      1d    system:controller:statefulset-controller                                                                                    kube-system/statefulset-controller
system:controller:ttl-controller              1d    system:controller:ttl-controller                                                                                            kube-system/ttl-controller
system:discovery                              1d    system:discovery                                                              system:authenticated, system:unauthenticated
system:kube-controller-manager                1d    system:kube-controller-manager                system:kube-controller-manager
system:kube-dns                               1d    system:kube-dns                                                                                                             kube-system/kube-dns
system:kube-scheduler                         1d    system:kube-scheduler                         system:kube-scheduler
system:node                                   1d    system:node                                                                   system:nodes
system:node-proxier                           1d    system:node-proxier                           system:kube-proxy
```

- Modify the official CC deployment file to add the serviceAccountName setting:

```
[root@docker1 f5]# cat f5-k8s-bigip-ctlr.yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: k8s-bigip-ctlr-deployment
  namespace: kube-system
spec:
  replicas: 1
  template:
    metadata:
      name: k8s-bigip-ctlr
      labels:
        app: k8s-bigip-ctlr
    spec:
      serviceAccountName: f5bigip
      containers:
        - name: k8s-bigip-ctlr
          # replace the version as appropriate
          # (e.g., f5networks/k8s-bigip-ctlr:1.1.0-beta.1)
          image: "f5networks/k8s-bigip-ctlr:1.1.0-beta.1"
          env:
            - name: BIGIP_USERNAME
              valueFrom:
                secretKeyRef:
                  name: bigip-login
                  key: username
            - name: BIGIP_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: bigip-login
                  key: password
          command: ["/app/bin/k8s-bigip-ctlr"]
          args: [
            "--bigip-username=$(BIGIP_USERNAME)",
            "--bigip-password=$(BIGIP_PASSWORD)",
            "--bigip-url=10.200.145.56",
            "--bigip-partition=kubernetes",
            "--pool-member-type=nodeport",
            # To manage a single namespace, enter it below
            # (required in v1.0)
            # To manage all namespaces, omit the `namespace` entry
            # (default as of v1.1.0-beta.1 )
            # To manage multiple namespaces, enter a separate flag for each
            # below
            # (as of v1.1.0-beta.1 )
            "--namespace=default",
            ]
```

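Since the deployment was already created earlier, re-apply the modified yaml with kubectl apply -f f5-k8s-bigip-ctlr.yaml, then check the CC pod again. It is now running normally, with the following log: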

[root@docker1 f5]# kubectl logs k8s-bigip-ctlr-deployment-4170992206-mckz7 -n kube-system

2017/07/18 14:49:16 [INFO] ConfigWriter started: 0xc4200c56b0

2017/07/18 14:49:16 [INFO] Started config driver sub-process at pid: 10

2017/07/18 14:49:16 [INFO] NodePoller (0xc420413980) registering new listener: 0x405d70

2017/07/18 14:49:16 [INFO] NodePoller started: (0xc420413980)

2017/07/18 14:49:16 [INFO] ProcessNodeUpdate: Change in Node state detected

2017/07/18 14:49:16 [INFO] Wrote 0 Virtual Server configs

2017/07/18 14:49:18 [INFO] [2017-07-18 14:49:18,068 __main__ INFO] entering inotify loop to watch /tmp/k8s-bigip-ctlr.config036477452/config.json
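The "Wrote 0 Virtual Server configs" line is a quick health indicator: it shows how many virtual server configurations the controller last wrote, and a count of 0 is consistent with no F5 ConfigMap having been created yet at this point. A minimal sketch for pulling that number out of saved log output follows. `last_written_config_count` is a hypothetical helper, and the pattern assumes the log format shown above.

```python
import re
from typing import List, Optional

# Pattern based on the log line shown above.
_WROTE = re.compile(r"\[INFO\] Wrote (\d+) Virtual Server configs")


def last_written_config_count(log_lines: List[str]) -> Optional[int]:
    """Return the count from the most recent 'Wrote N Virtual Server configs' line."""
    count = None
    for line in log_lines:
        match = _WROTE.search(line)
        if match:
            count = int(match.group(1))
    return count


if __name__ == "__main__":
    sample = [
        "2017/07/18 14:49:16 [INFO] NodePoller started: (0xc420413980)",
        "2017/07/18 14:49:16 [INFO] Wrote 0 Virtual Server configs",
    ]
    print(last_written_config_count(sample))
```

The function returns None when no such line is present, which distinguishes "nothing written yet" from "controller never reached that stage".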

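[title]Integration test with BIGIP[/title]

- BIGIP must have a routable network path to k8s; here the network on which the service is published is used to connect to BIGIP

- A custom partition must be configured on BIGIP in advance; the partition name was set to kubernetes in the CC deployment file above

- A business service must first be running in k8s; nginx is used as the example here: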

```
[root@docker1 scripts]# kubectl get svc -o wide
NAME                 CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE   SELECTOR
kubernetes           169.169.0.1      <none>        443/TCP        1d    <none>
my-mynginx-service   169.169.38.100   <nodes>       80:30910/TCP   4h    run=k8s-nginx
```

- Create an F5 ConfigMap

```
[root@docker1 f5]# cat f5-vs-config.yaml
# Note:
# "bindAddr" sets the IP address of the BIG-IP front-end virtual server
# omit "bindAddr" if you want to create a pool without a virtual server
kind: ConfigMap
apiVersion: v1
metadata:
  # name of the resource to create on the BIG-IP
  name: k8s.vs
  # the namespace to create the object in
  # As of v1.1.0-beta.1, the k8s-bigip-ctlr watches all namespaces by default
  # If the k8s-bigip-ctlr is watching a specific namespace(s),
  # this setting must match the namespace of the Service you want to proxy
  # -AND- the namespace(s) the k8s-bigip-ctlr watches
  namespace: default
  labels:
    # the type of resource you want to create on the BIG-IP
    f5type: virtual-server
data:
  # As of v1.1.0-beta.1, set the schema to "f5schemadb://bigip-virtual-server_v0.1.3.json"
  schema: "f5schemadb://bigip-virtual-server_v0.1.3.json"
  # Notes on the JSON below (kept here because JSON itself cannot contain comments):
  # - servicePort must always match the pod's port, regardless of which
  #   service type the F5 CC deployment yaml is configured for
  # - serviceName must match the service name shown by kubectl get svc
  data: |
    {
      "virtualServer": {
        "backend": {
          "servicePort": 80,
          "serviceName": "my-mynginx-service",
          "healthMonitors": [{
            "interval": 30,
            "protocol": "http",
            "send": "GET",
            "timeout": 91
          }]
        },
        "frontend": {
          "virtualAddress": {
            "port": 80,
            "bindAddr": "192.168.188.188"
          },
          "partition": "kubernetes",
          "balance": "round-robin",
          "mode": "http"
        }
      }
    }
```
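The data field of the ConfigMap is a JSON string, so a `#` comment left inside it makes the payload unparseable. A few local sanity checks before running kubectl create can catch that, as well as a partition name that does not match the one given to CC. The sketch below is only an illustration: `check_virtual_server` is a hypothetical helper and does not replace validation against the f5schemadb schema.

```python
import json
from typing import List


def check_virtual_server(data_json: str, expected_partition: str) -> List[str]:
    """Return a list of problems found in the JSON; an empty list means the checks passed."""
    try:
        doc = json.loads(data_json)
    except json.JSONDecodeError as exc:
        return [f"data is not valid JSON: {exc.msg} (line {exc.lineno})"]
    if not isinstance(doc, dict):
        return ["data must be a JSON object"]

    problems = []
    vs = doc.get("virtualServer", {})
    backend = vs.get("backend", {})
    frontend = vs.get("frontend", {})

    name = backend.get("serviceName")
    if not isinstance(name, str) or not name:
        problems.append("backend.serviceName must be a non-empty string")
    if not isinstance(backend.get("servicePort"), int):
        problems.append("backend.servicePort must be an integer")
    if frontend.get("partition") != expected_partition:
        problems.append(
            f"frontend.partition is {frontend.get('partition')!r}, "
            f"expected {expected_partition!r}"
        )
    return problems


if __name__ == "__main__":
    payload = """
    {
      "virtualServer": {
        "backend": {"servicePort": 80, "serviceName": "my-mynginx-service"},
        "frontend": {"partition": "kubernetes"}
      }
    }
    """
    print(check_virtual_server(payload, "kubernetes"))
```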

Run kubectl create -f f5-vs-config.yaml

- Check that the configuration has been created on F5 BIGIP

```
root@(dc1-v12-1)(cfg-sync Disconnected)(Active)(/Common)(tmos)# cd ../kubernetes
root@(dc1-v12-1)(cfg-sync Disconnected)(Active)(/kubernetes)(tmos)# list ltm virtual
ltm virtual default_k8s.vs {
    destination 192.168.188.188:http
    ip-protocol tcp
    mask 255.255.255.255
    partition kubernetes
    pool default_k8s.vs
    profiles {
        /Common/http { }
        /Common/tcp { }
    }
    source 0.0.0.0/0
    source-address-translation {
        type automap
    }
    translate-address enabled
    translate-port enabled
    vs-index 12
}
root@(dc1-v12-1)(cfg-sync Disconnected)(Active)(/kubernetes)(tmos)# list ltm pool
ltm pool default_k8s.vs {
    members {
        172.16.199.27:30910 {
            address 172.16.199.27
            session monitor-enabled
            state down
        }
        172.16.199.37:30910 {
            address 172.16.199.37
            session monitor-enabled
            state down
        }
    }
    monitor default_k8s.vs
    partition kubernetes
}
root@(dc1-v12-1)(cfg-sync Disconnected)(Active)(/kubernetes)(tmos)# list ltm monitor
ltm monitor http default_k8s.vs {
    adaptive disabled
    defaults-from /Common/http
    destination *:*
    interval 30
    ip-dscp 0
    partition kubernetes
    recv none
    recv-disable none
    send GET
    time-until-up 0
    timeout 91
}
```

- Scale-out and scale-in test in nodeport mode

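First, note that the current deployment asks for only one pod instance, so why are there two node IPs on BIGIP (the test environment is 1 master + 2 nodes)? This is because BIGIP is currently configured in nodeport mode. When k8s publishes a nodeport service, every node opens a local port and iptables load-balances the traffic between nodes internally, so F5 needs to add all node addresses to guard against the failure of individual nodes. In nodeport mode, therefore, the number of pool members always equals the actual number of nodes, whether there are more or fewer pod instances than nodes. The actual data path here is: client -> F5 BIGIP -> nodeip:nodeport -> k8s service internal LB

- Scale-out and scale-in test in clusterIP mode

A: Change the CC deployment mode to cluster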

```
[root@docker1 scripts]# kubectl edit deploy/k8s-bigip-ctlr-deployment -n kube-system
# Please edit the object below. Lines beginning with a '#' will be ignored,
# and an empty file will abort the edit. If an error occurs while saving this file will be
# reopened with the relevant failures.
#
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "2"
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"extensions/v1beta1","kind":"Deployment","metadata":{"annotations":{},"name":"k8s-bigip-ctlr-deployment","namespace":"kube-system"},"spec":{"replicas":1,"template":{"metadata":{"labels":{"app":"k8s-bigip-ctlr"},"name":"k8s-bigip-ctlr"},"spec":{"containers":[{"args":["--bigip-username=$(BIGIP_USERNAME)","--bigip-password=$(BIGIP_PASSWORD)","--bigip-url=10.200.145.56","--bigip-partition=kubernetes","--pool-member-type=nodeport","--namespace=default"],"command":["/app/bin/k8s-bigip-ctlr"],"env":[{"name":"BIGIP_USERNAME","valueFrom":{"secretKeyRef":{"key":"username","name":"bigip-login"}}},{"name":"BIGIP_PASSWORD","valueFrom":{"secretKeyRef":{"key":"password","name":"bigip-login"}}}],"image":"f5networks/k8s-bigip-ctlr:1.1.0-beta.1","name":"k8s-bigip-ctlr"}],"serviceAccountName":"f5bigip"}}}}
  creationTimestamp: 2017-07-18T14:01:09Z
  generation: 2
  labels:
    app: k8s-bigip-ctlr
  name: k8s-bigip-ctlr-deployment
  namespace: kube-system
  resourceVersion: "23031"
  selfLink: /apis/extensions/v1beta1/namespaces/kube-system/deployments/k8s-bigip-ctlr-deployment
  uid: 8d012bab-6bc1-11e7-b522-000c29420d98
spec:
  replicas: 1
  selector:
    matchLabels:
      app: k8s-bigip-ctlr
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: k8s-bigip-ctlr
      name: k8s-bigip-ctlr
    spec:
      containers:
      - args:
        - --bigip-username=$(BIGIP_USERNAME)
        - --bigip-password=$(BIGIP_PASSWORD)
        - --bigip-url=10.200.145.56
        - --bigip-partition=kubernetes
        # change the value below to cluster, then save the change with :wq
        - --pool-member-type=nodeport
        - --namespace=default
```

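B: After saving the change, check F5 BIGIP directly. The pool member has changed to the pod's IP, and there is only one IP because the nginx deployment is currently configured to run a single pod instance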

```
root@(dc1-v12-1)(cfg-sync Disconnected)(Active)(/kubernetes)(tmos)# list ltm pool
ltm pool default_k8s.vs {
    members {
        10.2.44.3:http {
            address 10.2.44.3
            session monitor-enabled
            state checking
        }
    }
    monitor default_k8s.vs
    partition kubernetes
}
```

C: Scale out the nginx instances: kubectl scale --replicas=3 deploy/k8s-nginx

Check the F5 configuration again; it now has 3 IPs:

```
root@(dc1-v12-1)(cfg-sync Disconnected)(Active)(/kubernetes)(tmos)# list ltm pool
ltm pool default_k8s.vs {
    members {
        10.2.2.2:http {
            address 10.2.2.2
            session monitor-enabled
            state checking
        }
        10.2.2.3:http {
            address 10.2.2.3
            session monitor-enabled
            state checking
        }
        10.2.44.3:http {
            address 10.2.44.3
            session monitor-enabled
            state down
        }
    }
    monitor default_k8s.vs
    partition kubernetes
}
```

In this mode, BIGIP must be able to reach the pod network directly
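The difference between the two modes comes down to which addresses end up as pool members. The following sketch models that rule with the addresses from the tests above. `pool_members` is a hypothetical function written for illustration, not code taken from CC.

```python
from typing import List


def pool_members(
    mode: str,
    node_ips: List[str],
    node_port: int,
    pod_ips: List[str],
    pod_port: int,
) -> List[str]:
    """Return the expected BIGIP pool members for the given pool-member-type."""
    if mode == "nodeport":
        # One member per node, regardless of how many pods are running.
        return [f"{ip}:{node_port}" for ip in node_ips]
    if mode == "cluster":
        # One member per pod; BIGIP must be able to reach the pod network.
        return [f"{ip}:{pod_port}" for ip in pod_ips]
    raise ValueError(f"unknown pool member type: {mode}")


if __name__ == "__main__":
    nodes = ["172.16.199.27", "172.16.199.37"]
    pods = ["10.2.2.2", "10.2.2.3", "10.2.44.3"]
    print(pool_members("nodeport", nodes, 30910, pods, 80))
    print(pool_members("cluster", nodes, 30910, pods, 80))
```

Scaling the deployment changes the result only in cluster mode; in nodeport mode the member list changes only when nodes are added or removed.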

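After all this effort, what is the benefit of using CC? Couldn't you simply write a program that reads the API server's output and then updates the configuration through F5's RESTful interface? In-depth discussion is welcome.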
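For anyone weighing the do-it-yourself route, one piece such a program would need is a reconciliation step that compares the members BIGIP currently has with the members k8s currently wants. A minimal sketch of just that step follows. `plan_pool_update` is a hypothetical function; it makes no calls to the API server or to BIGIP, and a real program would still need watching, retries, and error handling around it.

```python
from typing import Iterable, List, Tuple


def plan_pool_update(
    current: Iterable[str],
    desired: Iterable[str],
) -> Tuple[List[str], List[str]]:
    """Return (members to add, members to remove) to move from current to desired."""
    current_set, desired_set = set(current), set(desired)
    to_add = sorted(desired_set - current_set)
    to_remove = sorted(current_set - desired_set)
    return to_add, to_remove


if __name__ == "__main__":
    on_bigip = ["10.2.44.3:80"]
    in_k8s = ["10.2.2.2:80", "10.2.2.3:80", "10.2.44.3:80"]
    add, remove = plan_pool_update(on_bigip, in_k8s)
    print("add:", add)
    print("remove:", remove)
```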

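At present, the F5 configuration parameters that CC exposes through the ConfigMap are still fairly limited. CC itself is open source, though (https://github.com/F5Networks/k8s-bigip-ctlr), so if you are interested you can develop more complex features on top of it.

At present a ConfigMap definition cannot reference a svc's label, which limits flexibility. For example, if you want svcs carrying certain special labels to be configured onto a separate BIGIP, that currently cannot be done within a single ConfigMap.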

Comments

Are any commercial organizations using this F5 CIS solution to expose K8S services?

@kkfinkkfin Quite a few cases are already in production