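Environment:

Host 1: master + node1

Host 2: node2

Both hosts sit on the same layer-2 network and can reach each other.

The original environment used canal, with the flannel component running in vxlan mode.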

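First, remove the existing containers with kubectl delete -f canal.yaml.

After the deletion, the flannel.1 interface and its static fdb entries are left behind and have to be removed by hand. If they are not removed, the system keeps using the old flannel interface and its routes, and calico cannot create its route entries correctly.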

    [root@k8s-master calico]# ip link delete flannel.1
    [root@k8s-node1 ~]# bridge fdb del de:dc:e2:5c:ea:50 dev flannel.1 dst 172.16.40.199
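When more than one static fdb entry is left over, the delete commands can be generated instead of typed. The following is a minimal sketch, assuming the entries are listed with `bridge fdb show dev flannel.1` and that each line has the form `<mac> dst <ip> self permanent`; the function name `fdb_delete_commands` and the sample text are hypothetical, and only the MAC and destination address come from the article.

```python
from typing import List


def fdb_delete_commands(fdb_output: str, dev: str = "flannel.1") -> List[str]:
    """Build one `bridge fdb del` command per fdb entry that has a dst address."""
    commands = []
    for line in fdb_output.splitlines():
        fields = line.split()
        # Skip blank lines and entries without a remote address.
        if "dst" not in fields:
            continue
        dst_index = fields.index("dst") + 1
        if dst_index >= len(fields):
            continue
        mac, dst = fields[0], fields[dst_index]
        commands.append(f"bridge fdb del {mac} dev {dev} dst {dst}")
    return commands


# Assumed output format; the values are the ones used in the article.
sample = "de:dc:e2:5c:ea:50 dst 172.16.40.199 self permanent\n"
for command in fdb_delete_commands(sample):
    print(command)
```

The script only prints the commands, so they can be reviewed before being run as root on the node.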

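Deployment follows https://docs.projectcalico.org/v3.4/getting-started/kubernetes/installation/calico#installing-with-the-kubernetes-api-datastore50-nodes-or-less directly. The kubernetes datastore mode is used here because this is a small test environment, and it avoids the trouble of configuring etcd certificates. Pod addresses are assigned with host-local IPAM, with the per-node pod CIDRs allocated by kube-controller-manager: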

    [root@k8s-master ~]# cat /etc/systemd/system/kube-controller-manager.service
    [Unit]
    Description=Kubernetes Controller Manager
    Documentation=https://github.com/kubernetes/kubernetes
    [Service]
    ExecStart=/usr/local/bin/kube-controller-manager \
      --address=0.0.0.0 \
      --allocate-node-cidrs=true \
      --cluster-cidr=10.244.0.0/16 \
      --cluster-name=kubernetes \
      --cluster-signing-cert-file=/var/lib/kubernetes/ca.pem \
      --cluster-signing-key-file=/var/lib/kubernetes/ca-key.pem \
      --leader-elect=true \
      --master=http://127.0.0.1:8080 \
      --enable-hostpath-provisioner=true \
      --root-ca-file=/var/lib/kubernetes/ca.pem \
      --service-account-private-key-file=/var/lib/kubernetes/ca-key.pem \
      --service-cluster-ip-range=10.250.0.0/24 \
      --v=1
    Restart=on-failure
    RestartSec=5
    [Install]
    WantedBy=multi-user.target
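With --allocate-node-cidrs=true, kube-controller-manager carves per-node pod ranges out of --cluster-cidr. The sketch below illustrates that split with Python's `ipaddress` module. It assumes the default node mask of /24, since the unit file does not set --node-cidr-mask-size; the variable names are illustrative only.

```python
import ipaddress

# Values taken from the unit file.
cluster_cidr = ipaddress.ip_network("10.244.0.0/16")
service_cidr = ipaddress.ip_network("10.250.0.0/24")

# Assumption: default node mask size, not set explicitly in the unit file.
node_mask = 24

node_cidrs = list(cluster_cidr.subnets(new_prefix=node_mask))
print(len(node_cidrs))               # how many nodes the cluster CIDR can serve
print(node_cidrs[0], node_cidrs[1])  # the first two per-node ranges

# The pod and service ranges must not overlap.
print(cluster_cidr.overlaps(service_cidr))
```

Under that assumption the /16 yields 256 node ranges, starting with 10.244.0.0/24 and 10.244.1.0/24.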

Once deployment finishes, the host routes look like this:

    [root@k8s-master calico]# ip route
    default via 172.16.10.254 dev ens33 proto static metric 100
    default via 172.16.40.254 dev ens160 proto dhcp metric 101
    blackhole 10.244.0.0/24 proto bird
    10.244.0.76 dev cali7adc62d51c0 scope link
    10.244.0.77 dev cali0761ccbeace scope link
    10.244.0.78 dev calicd88c4c95c8 scope link
    10.244.0.79 dev cali3b3d3e2702c scope link
    10.244.0.80 dev calif1c9cee6be2 scope link
    10.244.0.81 dev calie89075bc69f scope link
    10.244.1.0/24 via 172.16.40.198 dev tunl0 proto bird onlink
    172.16.0.0/16 dev ens33 proto kernel scope link src 172.16.10.201 metric 100
    172.16.40.0/24 dev ens160 proto kernel scope link src 172.16.40.199 metric 101
    172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1

10.244.1.0/24 via 172.16.40.198 dev tunl0 proto bird onlink

The route to the node2 pod network goes through the tunl0 interface. The host interfaces are as follows:

    [root@k8s-master bin]# ifconfig
    cali0761ccbeace: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
            inet6 fe80::4c50:7dff:fea5:3d83 prefixlen 64 scopeid 0x20<link>
            ether 4e:50:7d:a5:3d:83 txqueuelen 0 (Ethernet)
            RX packets 0 bytes 0 (0.0 B)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 0 bytes 0 (0.0 B)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    cali3b3d3e2702c: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
            inet6 fe80::d036:88ff:fec8:4f97 prefixlen 64 scopeid 0x20<link>
            ether d2:36:88:c8:4f:97 txqueuelen 0 (Ethernet)
            RX packets 0 bytes 0 (0.0 B)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 0 bytes 0 (0.0 B)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    cali7adc62d51c0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
            inet6 fe80::b4e0:4dff:fef5:561d prefixlen 64 scopeid 0x20<link>
            ether b6:e0:4d:f5:56:1d txqueuelen 0 (Ethernet)
            RX packets 0 bytes 0 (0.0 B)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 0 bytes 0 (0.0 B)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    calicd88c4c95c8: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
            inet6 fe80::ac93:afff:fe52:ebeb prefixlen 64 scopeid 0x20<link>
            ether ae:93:af:52:eb:eb txqueuelen 0 (Ethernet)
            RX packets 0 bytes 0 (0.0 B)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 0 bytes 0 (0.0 B)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    calie89075bc69f: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
            inet6 fe80::bc14:91ff:fe5e:5f78 prefixlen 64 scopeid 0x20<link>
            ether be:14:91:5e:5f:78 txqueuelen 0 (Ethernet)
            RX packets 0 bytes 0 (0.0 B)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 0 bytes 0 (0.0 B)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    calif1c9cee6be2: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
            inet6 fe80::2491:78ff:fe53:93d1 prefixlen 64 scopeid 0x20<link>
            ether 26:91:78:53:93:d1 txqueuelen 0 (Ethernet)
            RX packets 0 bytes 0 (0.0 B)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 0 bytes 0 (0.0 B)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500
            inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255
            ether 02:42:e5:94:23:b6 txqueuelen 0 (Ethernet)
            RX packets 0 bytes 0 (0.0 B)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 0 bytes 0 (0.0 B)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
            inet 172.16.10.201 netmask 255.255.0.0 broadcast 172.16.255.255
            inet6 fe80::93eb:77c9:718:43d2 prefixlen 64 scopeid 0x20<link>
            ether 00:50:56:b3:46:85 txqueuelen 1000 (Ethernet)
            RX packets 725730 bytes 333845418 (318.3 MiB)
            RX errors 0 dropped 29 overruns 0 frame 0
            TX packets 939268 bytes 850995591 (811.5 MiB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    ens160: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
            inet 172.16.40.199 netmask 255.255.255.0 broadcast 172.16.40.255
            inet6 fe80::a35:8f62:68df:ae99 prefixlen 64 scopeid 0x20<link>
            ether 00:50:56:b3:09:f2 txqueuelen 1000 (Ethernet)
            RX packets 174953 bytes 18365369 (17.5 MiB)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 137582 bytes 20265910 (19.3 MiB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536
            inet 127.0.0.1 netmask 255.0.0.0
            inet6 ::1 prefixlen 128 scopeid 0x10<host>
            loop txqueuelen 1000 (Local Loopback)
            RX packets 11693082 bytes 3134807371 (2.9 GiB)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 11693082 bytes 3134807371 (2.9 GiB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    tunl0: flags=193<UP,RUNNING,NOARP> mtu 1440
            inet 10.244.0.1 netmask 255.255.255.255
            tunnel txqueuelen 1000 (IPIP Tunnel)
            RX packets 6 bytes 504 (504.0 B)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 6 bytes 504 (504.0 B)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

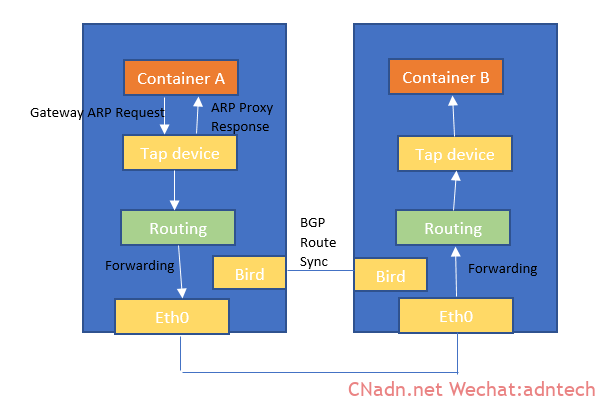

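The figure above is a schematic of how calico works. In ipip mode, a tunl0 interface is actually created and inter-node pod traffic goes through it.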

    [root@k8s-master calico]# calicoctl get node -o yaml
    apiVersion: projectcalico.org/v3
    items:
    - apiVersion: projectcalico.org/v3
      kind: Node
      metadata:
        creationTimestamp: 2018-08-05T14:42:30Z
        name: k8s-master
        resourceVersion: "9521989"
        uid: c7b4efba-98bd-11e8-aeed-000c29850765
      spec:
        bgp:
          ipv4Address: 172.16.40.199/24
          ipv4IPIPTunnelAddr: 10.244.0.1
    - apiVersion: projectcalico.org/v3
      kind: Node
      metadata:
        creationTimestamp: 2018-08-05T17:10:45Z
        name: k8s-node1
        resourceVersion: "9521986"
        uid: 7dff767b-98d2-11e8-aeed-000c29850765
      spec:
        bgp:
          ipv4Address: 172.16.40.198/24
          ipv4IPIPTunnelAddr: 10.244.1.1
    kind: NodeList
    metadata: {}

    [root@k8s-master calico]# calicoctl get ipPool -o yaml
    apiVersion: projectcalico.org/v3
    items:
    - apiVersion: projectcalico.org/v3
      kind: IPPool
      metadata:
        creationTimestamp: 2019-01-04T12:05:32Z
        name: default-ipv4-ippool
        resourceVersion: "9515496"
        uid: 08ffa4b6-1019-11e9-9cec-005056b34685
      spec:
        blockSize: 26
        cidr: 10.244.0.0/16
        ipipMode: Always
        natOutgoing: true
    kind: IPPoolList
    metadata:
      resourceVersion: "9522097"

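If ipipMode above is changed to Never, the route through tunl0 disappears from the routing table. It is replaced by an unencapsulated route over the physical interface, still learned through BGP (proto bird):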

10.244.1.0/24 via 172.16.40.198 dev ens160 proto bird
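The difference between the two routes to 10.244.1.0/24 is only the outgoing device. As a rough illustration, the sketch below parses a single `ip route` line and reports whether it leaves through tunl0; the helper name `describe_route` is hypothetical, and the parsing only handles the simple `via ... dev ...` form seen in this article.

```python
def describe_route(line: str) -> dict:
    """Extract destination, next hop and device from one `ip route` line."""
    fields = line.split()
    info = {"dst": fields[0], "via": None, "dev": None}
    # Walk consecutive token pairs and pick up the values after "via" and "dev".
    for key, value in zip(fields[1:], fields[2:]):
        if key in ("via", "dev"):
            info[key] = value
    info["from_bird"] = "proto bird" in line  # installed by calico's BGP daemon
    info["ipip"] = info["dev"] == "tunl0"     # encapsulated when it leaves via tunl0
    return info


# The two route lines shown in the article.
with_ipip = describe_route("10.244.1.0/24 via 172.16.40.198 dev tunl0 proto bird onlink")
without_ipip = describe_route("10.244.1.0/24 via 172.16.40.198 dev ens160 proto bird")

print(with_ipip["dev"], with_ipip["ipip"])
print(without_ipip["dev"], without_ipip["ipip"])
```

Both routes are installed by bird with the same next hop; only the ipip flag and the device differ.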

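For calico in routed mode, two setups are the most common:

- A BGP network built between hosts that are directly connected at layer 2 over Ethernet

- A full BGP network across routed host IPs, where the external network between the hosts also runs BGP

There is in fact a third case: the routing between the hosts is not BGP but, for example, OSPF. If the external network should also learn the pod network routes, BGP has to be redistributed into the IGP, though this does not seem to be commonly done.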
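Whether the first setup applies comes down to whether the peer host sits in a directly connected subnet. The sketch below is a simplified model of that decision, not calico's implementation. It uses the node addresses from the calicoctl output, while the peer 172.16.50.10 is a hypothetical address; CrossSubnet is a calico ipipMode value that the article itself does not use.

```python
import ipaddress


def directly_reachable(local_if: str, peer_ip: str) -> bool:
    """True if peer_ip lies inside the subnet of the local interface address."""
    return ipaddress.ip_address(peer_ip) in ipaddress.ip_interface(local_if).network


def needs_ipip(mode: str, local_if: str, peer_ip: str) -> bool:
    """Simplified model: does traffic to this peer get IPIP-encapsulated?"""
    if mode == "Always":
        return True
    if mode == "Never":
        return False
    if mode == "CrossSubnet":
        # Encapsulate only when the peer is not on the local subnet.
        return not directly_reachable(local_if, peer_ip)
    raise ValueError(f"unknown ipipMode: {mode}")


master = "172.16.40.199/24"  # ipv4Address of k8s-master
print(needs_ipip("CrossSubnet", master, "172.16.40.198"))  # k8s-node1, same subnet
print(needs_ipip("CrossSubnet", master, "172.16.50.10"))   # hypothetical routed peer
```

For the two hosts in this environment the check shows that no encapsulation is required, which is why setting ipipMode to Never works here.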
